The health effects of demand-side cost-sharing in European health insurance

Table of Contents

The rationale for demand-side cost-sharing in health insurance is to deter patients from using low value care. But if agents are cash constrained, demand-side cost-sharing can lead them to postpone or forgo valuable treatments. We use data on European (NUTS 2) regions to show that the interaction between poverty rate and out-of-pocket payments leads to unmet medical needs and higher mortality.

JEL codes: I11, I13, I18

Keywords: out-of-pocket payments, mortality, health insurance, poverty, unmet medical needs

How does this work? code

With this document, the reader can retrace the code which we used to produce the results, figures, tables etc. for this paper.

This file is written in Emacs org mode which allows us to combine text and code. The file is exported to pdf (via latex) and to html for the web-version. The web-version –which you are reading now– contains the sections tagged code which are not exported to the pdf version of the paper.

Here you can download the pdf of the paper.

For the export to html we use LaTeX.CSS with some small tweaks to make it compatible with the org-exporter that we use which is based on org-ref. The export of the org file to html is almost perfect, but some issues are not yet resolved. To illustrate, the html export has trouble with latex environments like align, split in equations etc. For the time being this is resolved by using multiple equation environments. Further, whereas latex drops the label on equations that are not cited, the html exporter is not able to do this. Hence, there are more numbered equations in the web-version of the paper. This is all a bit clumsy but otherwise works fine.

We use Python to program the model and PyMC for the Bayesian analysis. All these resources are open source and freely available. If you want to install Python, Anaconda is a good place to start.

To avoid replicating code that is used for different models, we use noweb. This is used as follows. First, we give the code block a name, like code-preamble. Then we want to use this code, we call the code block by <<code-preamble>>.

There is a separate file which describes how we get the data from Eurostat. The dataset itself is too big for github and can be found on DataverseNL.

The repository for the paper can be found here.

preamble code

######################################################### # This file is tangled from the index.org file in the root directory # the author runs the code from the index.org file directly in emacs # if you do not have emacs, you can run the code to generate the trace files # from this file # the file expects the following folder structure to run without problems: # the folder with the data should be located at: ./data/data_deaths_by_age_nuts_2.csv # the trace files are written to ./traces # figures are written to ./figures #########################################################

import xarray as xr import numpy as np import pymc as pm import pytensor.tensor as pt import pytensor import pandas as pd import graphviz as gr import arviz as az import scipy as sp import seaborn as sns import matplotlib.pyplot as plt plt.style.use('Solarize_Light2') from country_codes import eurostat_dictionary from tabulate import tabulate

We use the following versions of pymc and numpy:

print(pm.__version__) print(np.__version__)

5.1.2 1.24.2

loading data

We read in the data. We drop rows (combination of calendar year, NUTS 2 region, age and gender) where mortality measures (deaths, population or lagged_mortality) or oop (HF3_PC_CHE) and fraction of population that postponed treatment because it was too expensive (TOOEXP) are missing.

We select ages between age_min and age_max and calendar years between first_year and last_year. We drop rows where the number of deaths during a year exceeds the population at January 1st in that age-gender-year-region category.

age_min = 35 age_max = 85 age_range = np.arange(age_max-age_min+1)[:,np.newaxis] plot_age = np.arange(age_min,age_max+1) first_year = 2009 last_year = 2019 df = pd.read_csv('./data/data_deaths_by_age_nuts_2.csv') df.rename(columns={'at risk of poverty':'poverty',\ 'percentage_material_deprivation':'deprivation',\ 'UNMET':'unmet'},inplace=True) df.dropna(subset=['deaths','population', 'TOOEXP',\ 'HF3_PC_CHE','lagged_mortality'], axis=0, how ='any',inplace=True) df = df[(df.population > df.deaths) & (df.age >= age_min) & \ (df.age <= age_max) & (df.year <= last_year) &\ (df.year >= first_year)] df['mortality'] = df.deaths/df.population*100 \ # mortality as a percentage # lagged mortality as fraction of mean lagged mortality # per age/gender group df['lagged_mortality_s'] = (df['lagged_mortality'])/\ df.groupby(['age','sex'])['lagged_mortality'].\ transform('mean') #len(df)

1. Introduction

Most developed economies face rising healthcare expenditures. In many countries the healthcare sector grows faster than the economy as a whole (OECD 2021). One of the instruments that governments have to curb this expenditure growth is demand-side cost-sharing. The effect of demand-side cost-sharing on healthcare utilization is well known. As cost-sharing increases, healthcare becomes more expensive for the individual and demand for treatments falls. It is less clear whether and to which extent demand-side cost-sharing induces people to forgo low value care only (Newhouse and the Insurance Experiment Group 1993; Schokkaert and van de Voorde 2011).

It is commonly believed that health insurance subsidizes health consumption, incentivizing individuals to seek expensive treatments with limited health benefits. Economists refer to this phenomenon as moral hazard. When considering the social costs of such treatments, which exceed the individual’s out-of-pocket (oop) expenditures, it is advantageous to reduce moral hazard through increased demand-side cost-sharing. This trade-off involves balancing the efficiency gains resulting from reduced moral hazard against the increased risk to risk-averse individuals stemming from oop expenses.

This study aims to examine behavioral hazard, which occurs when cost-sharing discourages patients from pursuing valuable treatments (Baicker, Mullainathan, and Schwartzstein 2015). If patients choose to forgo treatments with higher value than the associated costs, it results in a reduction of overall social welfare. Specifically, we focus on situations where individuals opt out of or delay treatment due to its high oop cost.

The objective of this paper is to develop a model that can be estimated using aggregate data to assess negative health effects of demand-side cost-sharing. We are particularly interested in examining how cost-sharing can make valuable treatments unaffordable, thus reducing overall health outcomes. We begin by considering two key ideas. First, if demand-side cost-sharing disproportionately affects individuals with lower incomes, the reduction in access to valuable healthcare due to increased costs will be more pronounced among this group. Higher income individuals, who have sufficient resources, are more likely to pay for valuable treatments even if they become costly in terms of oop expenses. Individuals with lower incomes may face liquidity constraints that force them to postpone or forgo treatment. Second, if there is a significant decline in demand for high-value care, we expect to observe this trend in mortality statistics at the aggregate level.

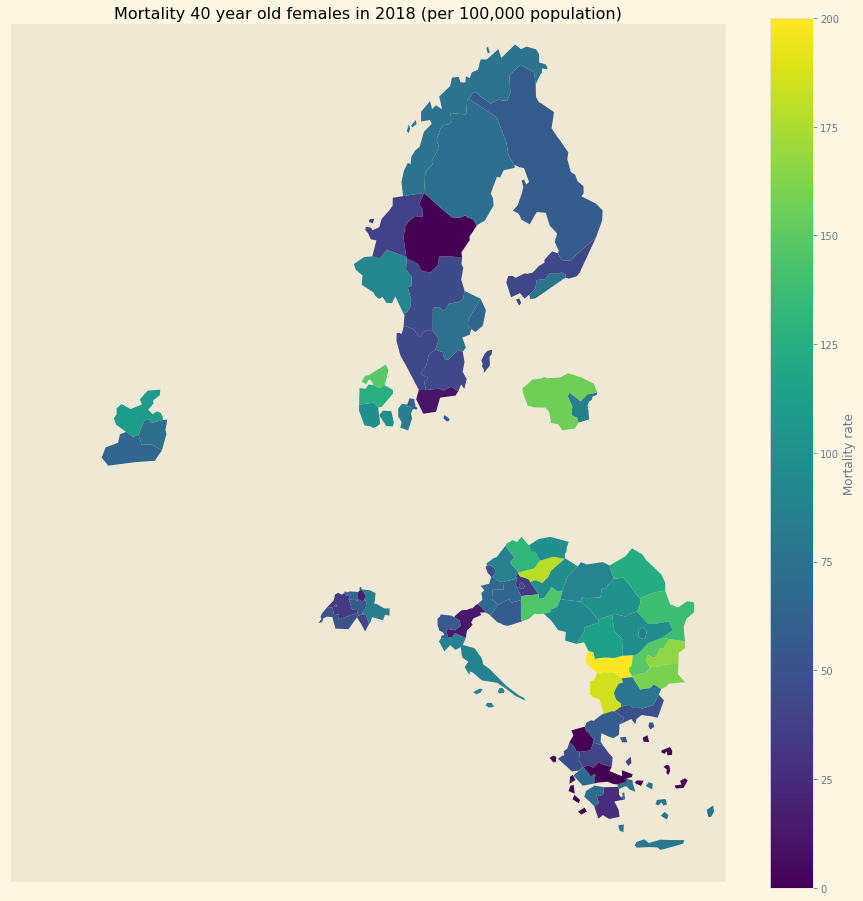

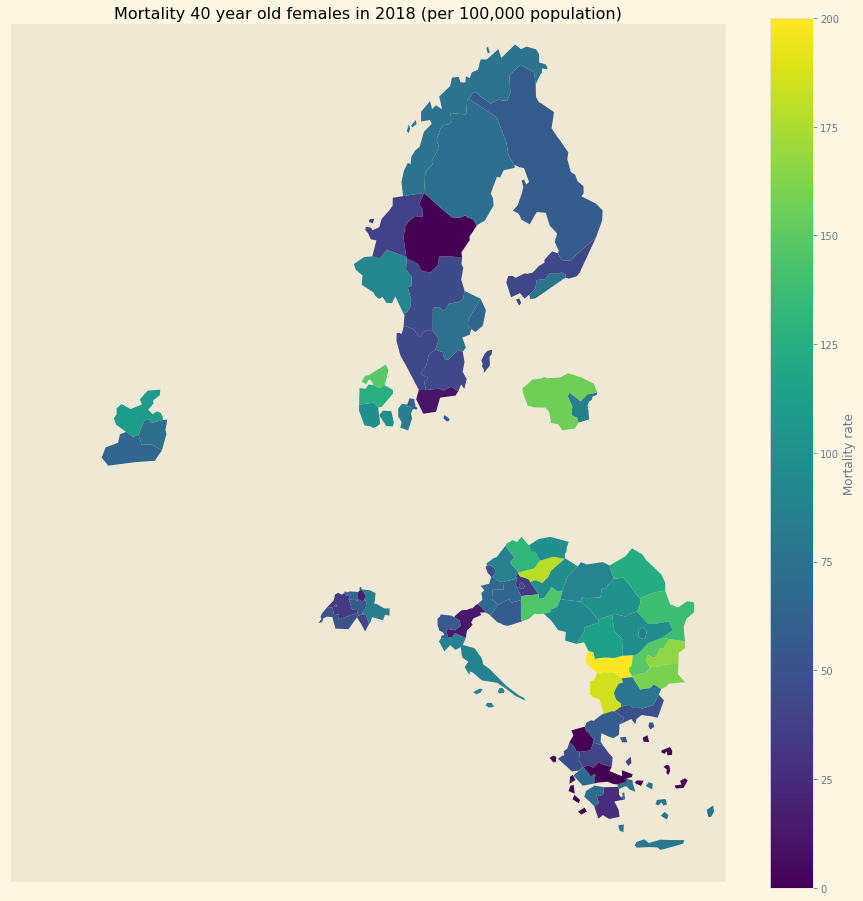

Figure 1: Mortality in NUTS 2 regions in Europe

To identify the health effects of cost-sharing we use mortality statistics of Eurostat at the NUTS 2 (Nomenclature of Territorial Units for Statistics) regional level. Figure 1 illustrates NUTS 2 regions used in this paper. Mortality varies by region/year/age/sex. In regions where the percentage of people on low income is high and demand-side cost-sharing is high, we expect to see high mortality. Since we have panel data, we control for NUTS 2 (and hence country) fixed effects.

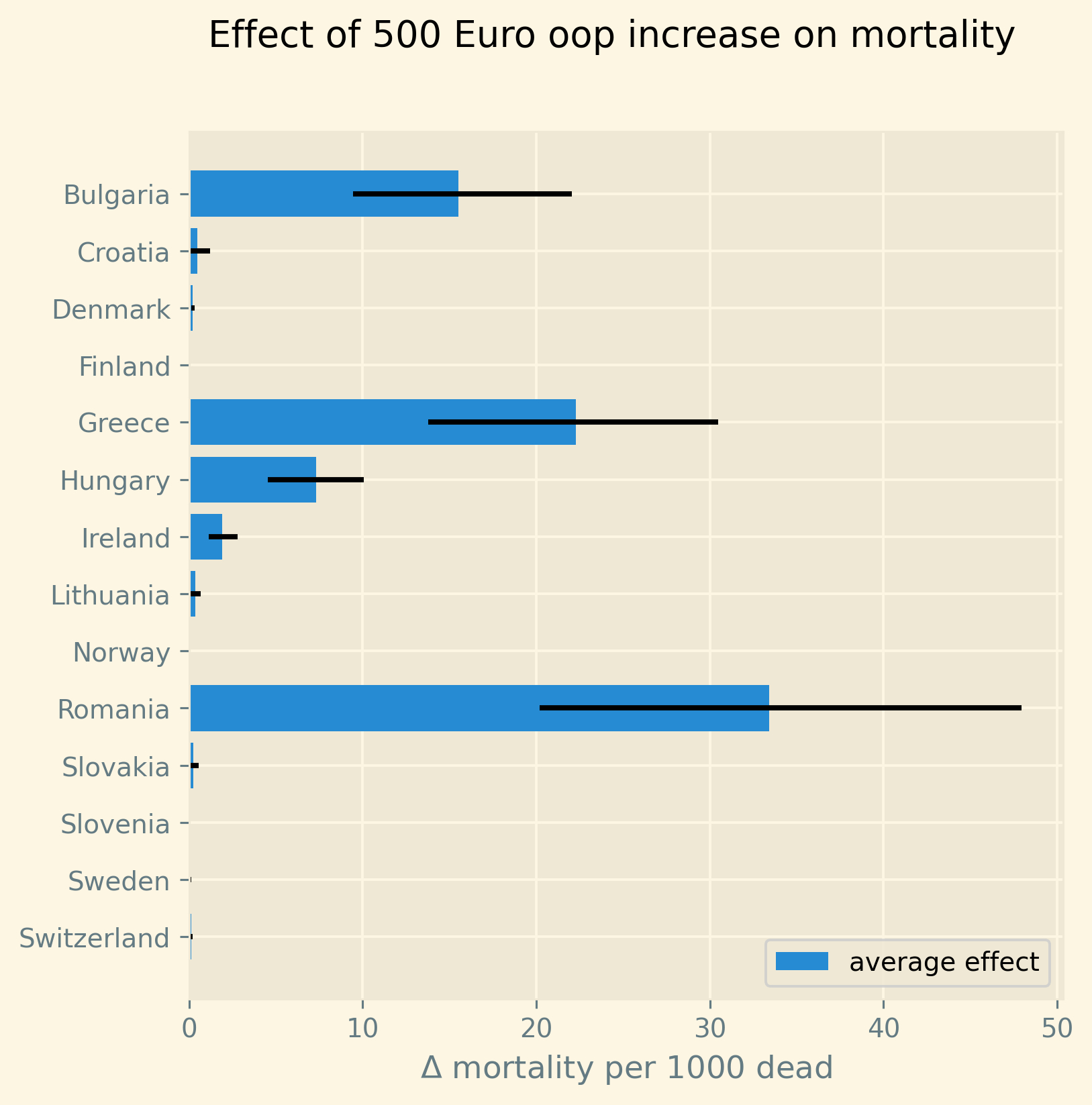

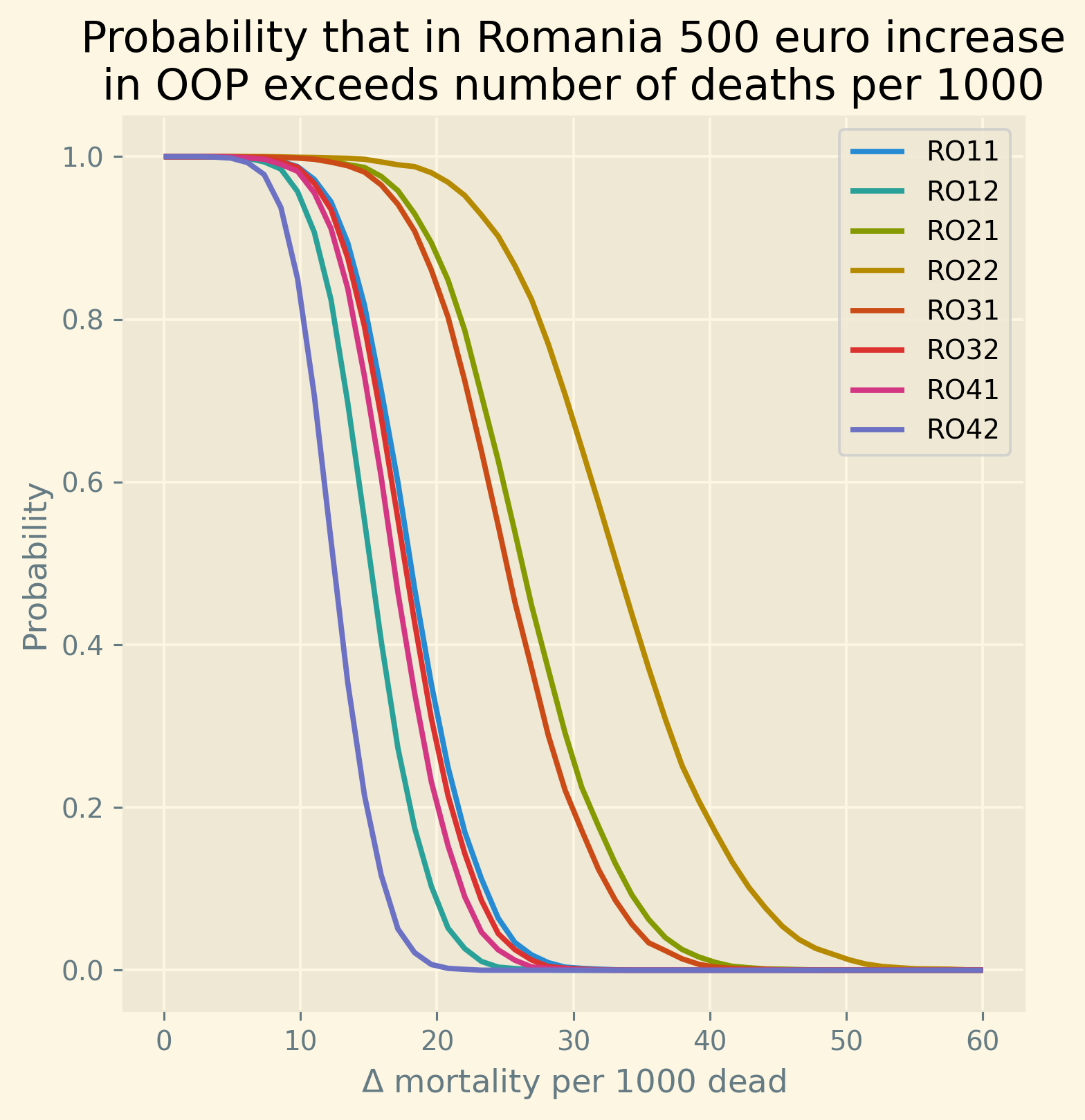

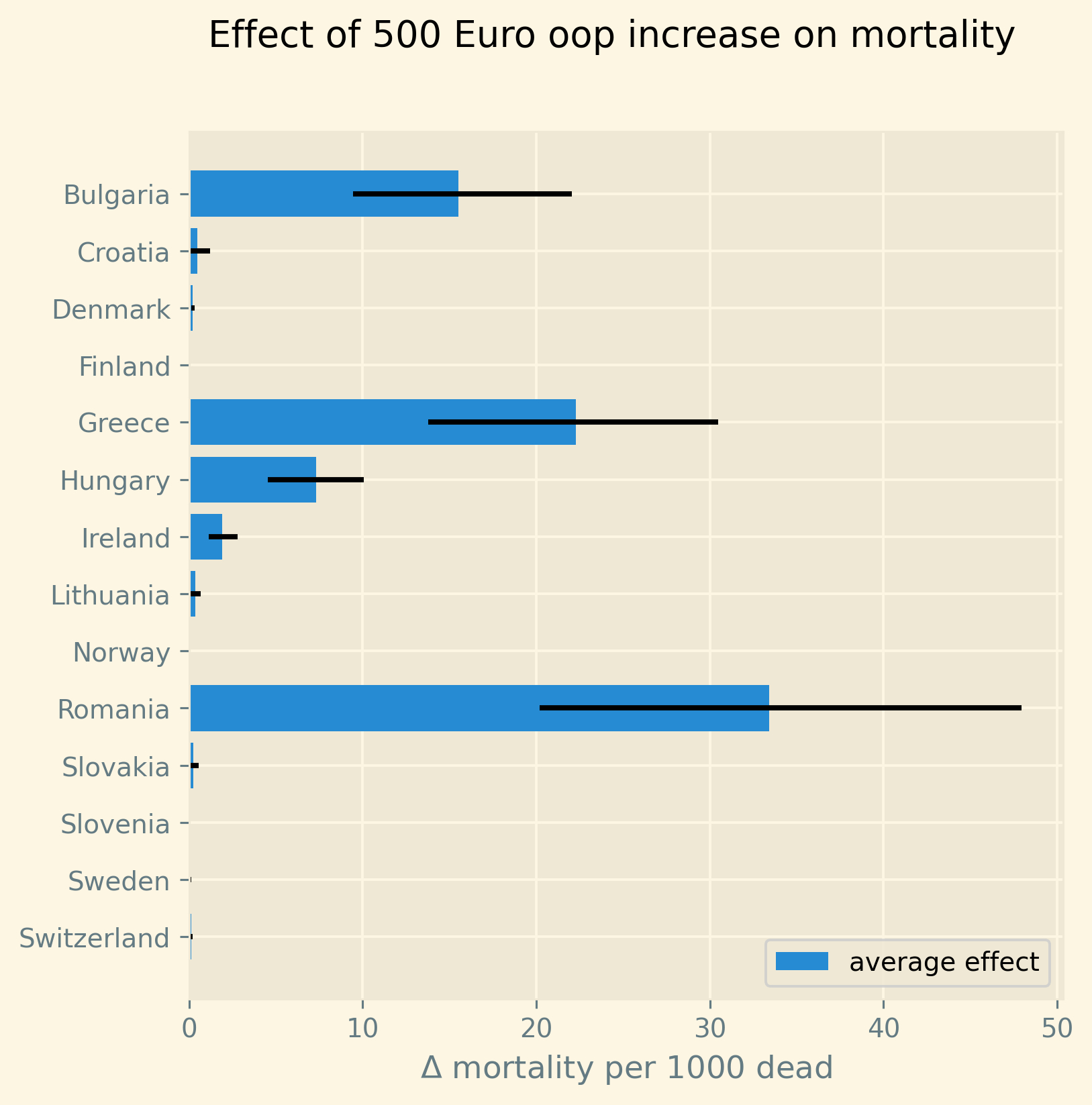

Despite the forthcoming analysis, we present a summarized overview of the results in Figure 2. Our analysis focuses on the NUTS 2 regions within each country where poverty rates are highest, as these regions are likely to experience the strongest impact at a regional level. Employing our estimated model, we simulate the effect of a 500 euro increase in oop expenses on mortality rates. We express this effect as the increase in deaths (attributable to the rise in oop) per 1000 deceased individuals. We adopt this measure for two reasons. Firstly, mortality rates are –fortunately– quite low, thus any alteration in oop expenses will have a relatively small impact on mortality. By reporting the increase in mortality per 1000 deceased individuals, we facilitate the interpretation of these numbers. Additionally, we present this mortality measure for diseases that exhibit similar orders of magnitude, such as pneumonia. Secondly, in our model, this measure per 1000 deceased individuals is age-independent. In other words, the number of individuals dying from the increase in oop expenses may vary across different age groups (as 25-year-olds are less likely to die than 80-year-olds). However, the proportion of individuals dying as a result of the oop expense increase, relative to the total number of deceased, remains constant across age and gender. This approach allows us to reduce the number of parameters requiring estimation and fits the data quite well.

The blue bars in the figure indicate the average simulated effect of the 500 euro increase for each country’s respective region, while the black lines represent the 95% probability interval of the effect. It is worth noting that the four countries with the highest poverty levels in our sample, namely Bulgaria, Greece, Hungary, and Romania, exhibit the most substantial effects. For these countries, the 95% probability interval of the effect is noticeably different from zero. Conversely, the regions of the Scandinavian countries, Slovenia, and Switzerland demonstrate effects close to zero at a regional level due to their very low poverty rates.

Figure 2: Increase in number of deaths per 1000 dead due to a 500 euro increase in out-of-pocket payment for the region in each country where poverty is highest. Bars present the average predicted effect and black lines the 95% prediction interval.

The results suggest the following policy implications. An increase in oop has a measurable effect on mortality in regions where poverty is high. Policies to address this include a scheme that subsidizes healthcare expenditure (on top of health insurance) for poor people; e.g. through means-tested cost-sharing. A downside of such a targeted intervention is a higher marginal tax rate at low income levels contributing to a poverty trap. Indeed, if by earning more, the oop subsidy falls, the increase in net income is reduced. This makes such an increase in income less attractive. Alternatively, a government can introduce co-payments that vary with the cost-effectiveness of the treatment. Treatments with high value added would then feature a low co-payment to prevent people from postponing valuable care. This can also help to reduce mortality associated with cost-sharing (Chernew et al. 2008).

This study is not the first to examine the impact of demand-side cost-sharing on mortality. There is a collection of recent studies employing innovative methodologies and primarily relying on individual-level data to establish the causal effect of health insurance on health and mortality. There are challenges associated with identifying the effect of health insurance on health outcomes using individual-level data. To illustrate, there is a selection bias where individuals with poorer health tend to obtain more comprehensive health insurance due to higher anticipated medical expenditures. This bias can distort results in a way that individuals with more extensive coverage may experience adverse health outcomes, such as higher mortality rates.

Several studies have utilized the Medicaid eligibility expansion under the Affordable Care Act, which was implemented at various times across different states in the US, enabling the implementation of a difference-in-differences identification strategy. These studies have demonstrated that the Medicaid expansion (resulting in more comprehensive health insurance coverage) has led to a reduction in mortality rates (Borgschulte and Vogler 2020; Miller, Johnson, and Wherry 2021). Other analyses focus on Medicare Part D prescription drug coverage, in which end-of-year pricing displays non-linear patterns based on expenditure (Chandra, Flack, and Obermeyer 2021). The primary finding indicates that increases in oop costs for drugs result in reduced drug use, including the use of high-value treatments, subsequently leading to higher mortality rates. Goldin and colleagues conducted an experimental study in which individuals subject to the Affordable Care Act’s health insurance mandate were reminded of potential financial penalties for non-compliance. This reminder prompted individuals to opt for health insurance instead of remaining uninsured, and as a result, mortality rates were lower among those who received the reminder compared to the control group who did not (Goldin, Lurie, and McCubbin 2020).

Our paper adds to this evidence of negative health effects of demand-side cost-sharing in the following way. First, we utilize European data instead of US data. European countries tend to have a more homogeneous health insurance system compared to the diverse range of options available within the US. In the US, individuals may have employer-sponsored insurance, Medicaid or Medicare coverage, or no insurance at all, making it challenging to detect aggregate-level effects of changes in, say Medicaid coverage. On the other hand, European countries tend to have nationally determined health insurance features, resulting in a higher level of consistency. For instance, the OECD Health Systems Characteristics Survey shows that more than 90% of the population in European countries obtains primary healthcare coverage through automatic or compulsory insurance, with percentages exceeding 99% or 100% in most cases. In contrast, the corresponding figure for the US is less than one third. Therefore, country or region-wide statistics in Europe provide a better representation of the insurance situation for most citizens compared to the US, although they may not capture all individual nuances such as the purchase of complementary insurance.

Second, our paper highlights the association between high mortality rates and regions characterized by both high oop costs and poverty. This finding aligns with previous research indicating that healthcare utilization is influenced by individuals’ liquidity constraints. Individuals with lower incomes tend to defer or forgo valuable treatments when these are expensive (Gross, Layton, and Prinz 2020; Nyman 2003). Our focus on low incomes may result in an underestimation of the mortality effect of cost-sharing, as individuals with higher incomes may also forgo necessary treatments due to oop expenses (Brot-Goldberg et al. 2017; Chandra, Flack, and Obermeyer 2021). However, in this case, the decision to forgo treatment is more likely driven by factors other than liquidity issues.

Third, our study utilizes the regional structure of Eurostat data. We examine the impact of the interaction between oop expenses and poverty on mortality within specific age-gender groups at the NUTS 2 regional level. This approach helps address potential endogeneity concerns. For example, a country with an overall low health status may implement generous health insurance policies to improve population health. This direction of causality conflicts with our research focus. By analyzing variations in health within regions in relation to oop costs and poverty, while controlling for other factors using NUTS 2 fixed effects, we mitigate this issue. Moreover, examining health and mortality within each age cohort allows us to account for variations in age distributions across countries and regions. Other potential confounding effects when using regional data are discussed in a separate section below.

Fourth, Eurostat variables derived from the EU-SILC survey enable us to concentrate on the relevant causal mechanism. The survey includes questions about unmet medical needs in the past months and the reasons for these unmet needs. One of the reasons cited is the cost, which leads individuals to postpone or forgo treatment. This information allows us to simultaneously estimate the percentage of individuals in a NUTS 2 region who forgo treatment due to cost and the effect of unmet medical needs on mortality. Through this approach, we capture the relationship between high interaction effects of oop costs and poverty, an increased number of individuals postponing treatment due to cost, and higher regional mortality rates.

Finally, our paper distinguishes itself from the literature on the impact of income and wealth on health that typically relies on cross-country data (Chetty et al. 2016; Mackenbach et al. 2008; Semyonov, Lewin-Epstein, and Maskileyson 2013). This literature generally finds an association between lower income and wealth and poorer health status, although the exact causal mechanism remains unclear (Cutler, Lleras-Muney, and Vogl 2011). Two potential mechanisms have been proposed: higher income leading to increased expenditure on treatments and consequently better health, or healthier individuals having higher productivity and earning higher incomes. Our approach, incorporating fixed effects and using survey questions on unmet medical needs, allows us to focus on the mechanism where a high interaction effect between oop costs and poverty leads to unmet medical needs, resulting in poorer health status and higher mortality rates.

In summary, compared to studies utilizing individual-level data, our approach provides both a broader overview –based on a number of countries, instead of, say 65 year old Medicare users in the US– and less precise estimation of the effect of insurance generosity on mortality. Although we do interpret our results using the size of the effect, our main goal is to establish that an increase in oop costs in a poor region increases mortality. In particular, we quantify how sure we are that this effect is positive.

The next section presents a model explaining the relationship between the variables mortality, poverty, oop expenditure and the fraction of people forgoing treatment because it is too expensive. Then we describe the Eurostat data that we use. We explain the empirical model that we estimate. Estimation results are presented for the baseline model and we show that these are robust with respect to a number of our modeling choices. We conclude with a discussion of the policy implications. The appendix contains the proofs of our results and more details on our data and estimation. The online appendix is the html version of this paper which includes –per section– the python code that is used in each section’s analysis.1 This is a final advantage of using data at the regional level. The repository contains the python code that gets the data from Eurostat so that each step of this analysis can be replicated. The data used for this paper can be downloaded from DataverseNL (Boone 2022).

1.1. Map code

As we use a different python kernel here from the one used in the rest of the paper (due to conflicting supporting packages at the time of the analysis), we need to import some libraries and the data again. We use geopandas to plot the map of the NUTS 2 regions where the color indicates mortality per 100k population.

import numpy as np import geopandas as gpd import matplotlib.pyplot as plt plt.style.use('Solarize_Light2') import pandas as pd # import altair as alt

# read the NUTS shapefile and extract # the polygons for a individual countries nuts=gpd.read_file('./SHP/NUTS_RG_60M_2021_4326_LEVL_2.shp') age_min = 35 age_max = 85 plot_age = np.arange(age_min,age_max+1) first_year = 2009 last_year = 2019 df = pd.read_csv('./data/data_deaths_by_age_nuts_2.csv') df.dropna(subset=['deaths','population', 'TOOEXP',\ 'HF3_PC_CHE','lagged_mortality'], axis=0, how ='any',inplace=True) df = df[(df.population > df.deaths) & (df.age >= age_min) & \ (df.age <= age_max) & (df.year <= last_year) &\ (df.year >= first_year)] df['mortality'] = df.deaths/df.population*100000 df = df[(df.year==2018) & (df.age==40) & (df.sex=='F')] nuts = nuts.to_crs(epsg=3035) nuts['centroids'] = nuts.centroid nuts = nuts.merge(df, how='inner',\ left_on = 'NUTS_ID', right_on = 'nuts2')

nuts[nuts.sex=='F'].plot(column='mortality', legend=True, figsize=(16,16), # vmin = 71, vmax = 0.002*100000, missing_kwds={'color': 'lightgrey'}, legend_kwds={'label': "Mortality rate", 'orientation': "vertical"}) # adjust plot domain to focus on EU region plt.xlim(0.25e7, 0.6e7) plt.ylim(1.3e6, 5.5e6) plt.xticks([],[]) plt.yticks([],[]) plt.title(\ 'Mortality 40 year old females in 2018 (per 100,000 population)'); # plt.tight_layout() # plt.legend('right');

2. Theory

The relevant variables in our data are mortality per region/year/age/sex category, the percentage of healthcare expenditure paid out-of-pocket (oop), the poverty rate and the fraction of people per region postponing or forgoing treatment because it is too expensive. We introduce a model to explain how these variables are related. Then we discuss what variables are missing from the model potentially causing confounding effects.

2.1. simple model

Consider a population (of a certain age and gender in a particular year) in an EU region where a fraction \(\alpha \in \langle 0,1 \rangle\) has low income \(y^l\) and fraction \(1-\alpha\) high income \(y^h\). We think of \(\alpha\) as the poverty rate. Let \(\pi^j\) denote the probability that someone with income \(y^j, j=l,h\) falls ill. As is well known, low income people tend to have a lower health status (Cutler, Lleras-Muney, and Vogl 2011). We capture this by assuming \(\pi^l > \pi^h\). People on low income may have a less healthy diet, exercise less etc. due to either the cost or knowledge of healthy lifestyle choices. This makes it more likely that they fall ill. Thus we separate the direct health effect of income (\(\pi^l > \pi^h\)) from treatment decisions made by people on low income.

Generally speaking, oop payments tend to take two forms that we want to capture: a coinsurance rate, which we denote \(\xi \in [0,1]\), and a maximum expenditure, which we denote \(D\) (for deductible). Some systems have a combination of the two.

Conditional on falling ill, there is a probability \(\zeta_i \in [0,1]\) that the patient is advised to get treatment \(i\) at cost \(x_i\) for \(i\) in the set of “illnesses” \(I\) with \(\sum_{i \in I} \zeta_i =1\).2 We define \(I_{\xi}\) as the subset of \(I\) where \(\xi x_i < D\) and \(oop_i = \xi x_i\) and set \(I_D\) where \(\xi x_i \geq D\) and \(oop_i = D\). To keep things simple, we assume that \(\zeta_i\) is exogenous to the patient. We model the treatment decision on the extensive margin only: an agent accepts or rejects the treatment proposed by a physician.3 A pure coinsurance system has \(\xi < 1\) and \(I_{\xi}=I\). A pure deductible system \(\xi=1\) and \(I_D\) non-empty. A combination of the two has \(\xi<1\) and there is a maximum on the oop payment. Health insurance systems in Europe tend to have such maximum oop expenditure.4 An increase in either \(\xi\) or \(D\) is interpreted as making health insurance less generous.

Whereas with individual level data one can determine whether an individual faces a positive treatment price at the margin (E.g. using the end-of-year price as in Keeler, Newhouse, and Phelps 1977; Ellis 1986), this is not possible with the aggregate data that we use here. Hence, we rely on an aggregate summary variable, denoted OOP, measured as oop payments over total healthcare expenditure. That is, the fraction of healthcare expenditure paid by patients oop. We interpret this variable as capturing the generosity of the health insurance system. To illustrate, if healthcare is free at point of service, OOP equals zero; if there is no health insurance at all, OOP equals 1. In a pure coinsurance system with rate \(\xi\) applying to all treatments, OOP equals \(\xi\). It is the cap on oop expenditure (like a deductible) that makes the relation between OOP and healthcare use non-linear. The challenge then is to capture changes in \(\xi\) and \(D\) although we do not directly observe these variables in the data. This is what the model sets out to do.

If an ill patient receives treatment, we denote her (expected) health \(\sigma\), while without treatment (expected) health equals \(\sigma_0\) with \(0 < \sigma_0 < \sigma < 1\).5 Health is normalized at value one for a patient who does not fall ill. The trade off between health and oop is captured by \(\sigma_0/\sigma <1\) and a simple assumption that utility is multiplicative in health and consumption. That is, consumption yields higher utility if you are healthier. To put it bluntly, if you are healthy and can travel, go skiing etc. consumption yields higher utility than when you are ill, lying in bed all day. We model the patient’s treatment decision as:

\begin{equation} \label{orgff195e9} \nu \sigma u(y^j-oop_i) > \sigma_0 u(y^j) \end{equation}where utility \(u(.)\) is determined by how much money can be spent on other goods: income \(y^j\) minus oop in case of treatment and \(y^j\) if no treatment is chosen. The utility function \(u(.)\) is increasing and concave in consumption: \(u(.), u'(.) >0\) and \(u''(.) < 0\). Further, parameter \(\nu\) captures other factors than pure financial ones affecting a patient’s treatment choice.6 If the inequality holds, the patient accepts treatment \(i\).

In our data, we have a variable “unmet medical needs” based on a number of motivations: treatment is too far away to travel to, there is a long waiting list, the patient is scared to undergo treatment etc. To make our point, it is enough to assume that such factors affect utility in a multiplicative way. To illustrate, if the patient has to travel far for treatment, utility is reduced by multiplying it with a value of \(\nu < 1\). Agents differ in \(\nu\) and the cumulative distribution function of \(\nu\) is given by \(G(\nu)\), its density function by \(g(\nu)\). Other factors can include waiting time till treatment, belief that the condition will resolve itself without intervention, poor decision making e.g. with a focus on the short term thereby undervaluing the benefit of treatment.

The probability that a patient with income \(y^{j}\) accepts treatment \(i\) offered by a physician equals

\begin{equation} \label{orgacdd769} \delta_i^j = 1-G\left( \frac{\sigma_0}{\sigma} \frac{u(y^{j})}{u(y^{j}-oop_i)} \right) \end{equation}that is, \(\nu\) is big enough that inequality \eqref{orgff195e9} holds. With probability \(G\left( \frac{\sigma_0}{\sigma} \frac{u(y^{j})}{u(y^{j}-oop_i)} \right)\) the patient decides to postpone or forgo treatment \(i\).

The probability that a patient postpones or skips a treatment because it is too expensive is given by

\begin{equation} \label{org79aac8e} G\left( \frac{\sigma_0}{\sigma} \frac{u(y^{j})}{u(y^{j}-oop_{i})} \right) - G\left( \frac{\sigma_0}{\sigma} \right) \end{equation}These are agents \(\nu\) that would have chosen treatment \(i\) if it were free (\(oop_{i}=0\) and \(u(y^j)/u(y^j-oop_i)=1\)) but who forgo treatment now that it costs \(oop_{i}>0\). The probability \(G(\sigma_{0}/\sigma)\) captures factors like waiting lists or the patient hoping that the health problems resolve themselves without treatment. That is, reasons for postponing treatment not related to oop payments.

In the proof of the lemma below, we show that the probability of accepting treatment, \(\delta_i^j\), is increasing in income \(y^j\) and decreasing in \(oop_{i}\), as one would expect.

Note that this model differs from a standard Rothschild and Stiglitz –R&S– health insurance model (Rothschild and Stiglitz 1976) in the following way. In an R&S model income plays no role and people with low health status have generous insurance coverage. Hence, they would not postpone valuable care. In our model, people with low health tend to have low income and may skip valuable treatment if the oop expense is high. This negatively affects their health.

In Appendix 9 we specify how (unobserved) health and treatment decisions translate into (observed) mortality for each age/gender class.

In our data, the variable Unmet varies with NUTS 2 region and year. In terms of our model, we define this variable with subscript \(2\) for region and \(t\) for calendar year as follows:

with treatment probability \(\delta^j_i\) for illness \(i \in I\) and income class \(j \in \{l,h\}\) varying with country \(c\) and year \(t\) because oop varies with countries over time. In words, for people on low [high] income –fraction $α2t [1-α2t]$– there is a probability \(\zeta_i \pi^l [\zeta_i \pi^h]\) of falling ill with disease \(i\) where they forgo treatment with probability \(1-\delta_{ict}^l [1-\delta_{ict}^h]\).

Further, in our data we have the variable OOP defined as oop payments as a percentage of healthcare expenditure. In terms of our model, we write this –ignoring subscripts– as

where \(\zeta_i (\alpha \pi^l \delta^l_i + (1-\alpha) \pi^h \delta^h_i)\) denotes the fraction of people accepting treatment \(i\). If people do not accept treatment, there is no oop and no expenditure. The numerator of OOP contains the oop payments \(oop_{i}\) and the denominator expenditures \(x_i\). If \(I_{\xi} = I\), it is clear that \(\text{OOP} = \xi\). Because \(I_D\) is non-empty (European countries have a maximum oop payment), the expression for OOP is actually non-trivial. We can also write OOP as the ratio of average oop per head and average healthcare expenditure per head:

In our data these variables vary by country \(c\) and year \(t\).

The following lemma summarizes the main results from the model and presents the equations for mortality \(m_{ag2t}\) varying with age, gender, NUTS 2 region, calendar year and the fraction of people forgoing treatment because it is too expensive, \(\text{TooExp}_{2t}\) that we estimate below. Further, our simulation results are presented in terms of the relative increase in deaths due to the increase in oop, \(dm_{ag2t}/m_{ag2t}\). In the lemma we use the following indices: age \(a\), gender \(g \in \{f,m\}\), country \(c\), NUTS 2 region \(2\), calendar year \(t\)

Healthcare demand \(\delta = 1-G(.)\) is increasing in income \(y^j\) and decreasing in \(oop_i\) (\(\xi\) or \(D\)).

We write the expression for mortality of age cohort a and gender g in NUTS 2 region 2 at time t as:

\[

m_{ag2t} = \frac{e^{\beta_{ag}}}{1+e^{\beta_{ag}}} e^{\left( \mu_2 + \gamma \ln \left(\frac{m_{a-1,g,2,t-1}}{\bar{m}_{a-1,g}}\right)+ \beta_{poverty}\text{Poverty}_{2t} + \beta_{unmet}\text{Unmet}_{2t}\right)}

\]

where \(\beta_{poverty}, \beta_{unmet} > 0\).

The linear expansion of TooExp with respect to OOP can be written as

\[

\text{TooExp}_{2t} = b_{0,2} + b_{0,t} + \text{OOP}_{ct} \bar{x}_{ct} \left( b_{oop,c} + b_{interaction,c} \text{Poverty}_{2t} \right)

\]

where \(b_{oop,c},b_{interaction,c}>0\).

Finally, the mortality effect of a 500 euro increase in oop can be written as:

\begin{eqnarray*} \frac{dm_{ag2t}}{m_{ag2t}} &= \beta_{unmet} \text{TooExp}_{2t}(1-\text{TooExp}_{2t}) 500 \times \\ & (b_{oop,c}+b_{interaction,c} Poverty_{2t}) \end{eqnarray*}As derived in the appendix, mortality can be written as the multiplication of an age/gender effect with a factor depending on the situation in the NUTS 2 region. We think of the age/gender effect as biology that is the same across regions. This is modeled as a sigmoid of age and gender fixed effects, \(\beta_{ag}\), which makes sure the probability of death is between 0 and 1. We multiply this baseline probability with a multiplier capturing the other effects.

First, NUTS 2 region fixed effects, \(\mu_2\), which capture regional variation in the probability of falling ill. One can think of lifestyle habits that vary by region, external factors affecting health like clean air, road safety, travel distance to closest medical facilities that tend to be longer in rural areas etc.

Second, whether this age \(a\) cohort experienced a health shock in the previous period \(t-1\) when aged \(a-1\). If there was such a negative health shock that increased mortality, we expect that part of this shock spills over in the current period further increasing mortality. We measure the health shock as mortality for this group in the previous period compared to average mortality for this group (across regions and time).

Third, the region’s poverty level and the fraction of people with unmet medical needs in the region in year \(t\) affect mortality. As shown in the proof of the lemma, \(\beta_{poverty} = (\pi^l - \pi^h)(1-\sigma) > 0\): if there are no unmet needs, poverty still raises mortality as poor people fall ill more often (\(\pi^l-\pi^h >0\)) and treatment only partially recovers their health (\(1-\sigma>0\)). This is the health effect of low income. Further, \(\beta_{unmet}=(\sigma-\sigma_0)>0\): with unmet medical needs, patients end up with lower health than they would have gotten with treatment (\(\sigma_0 < \sigma\)). In words, we expect mortality (in a region in year \(t\)) to be higher (for a given age/gender category) if poverty is higher and there are more people with unmet medical needs.

If the sum of these three terms is negative, the multiplier is less than 1 and mortality for this age/gender/region/year combination is reduced compared to the baseline probability given by the sigmoid. If the sum of the terms is positive, mortality for this observation is higher than the baseline probability.

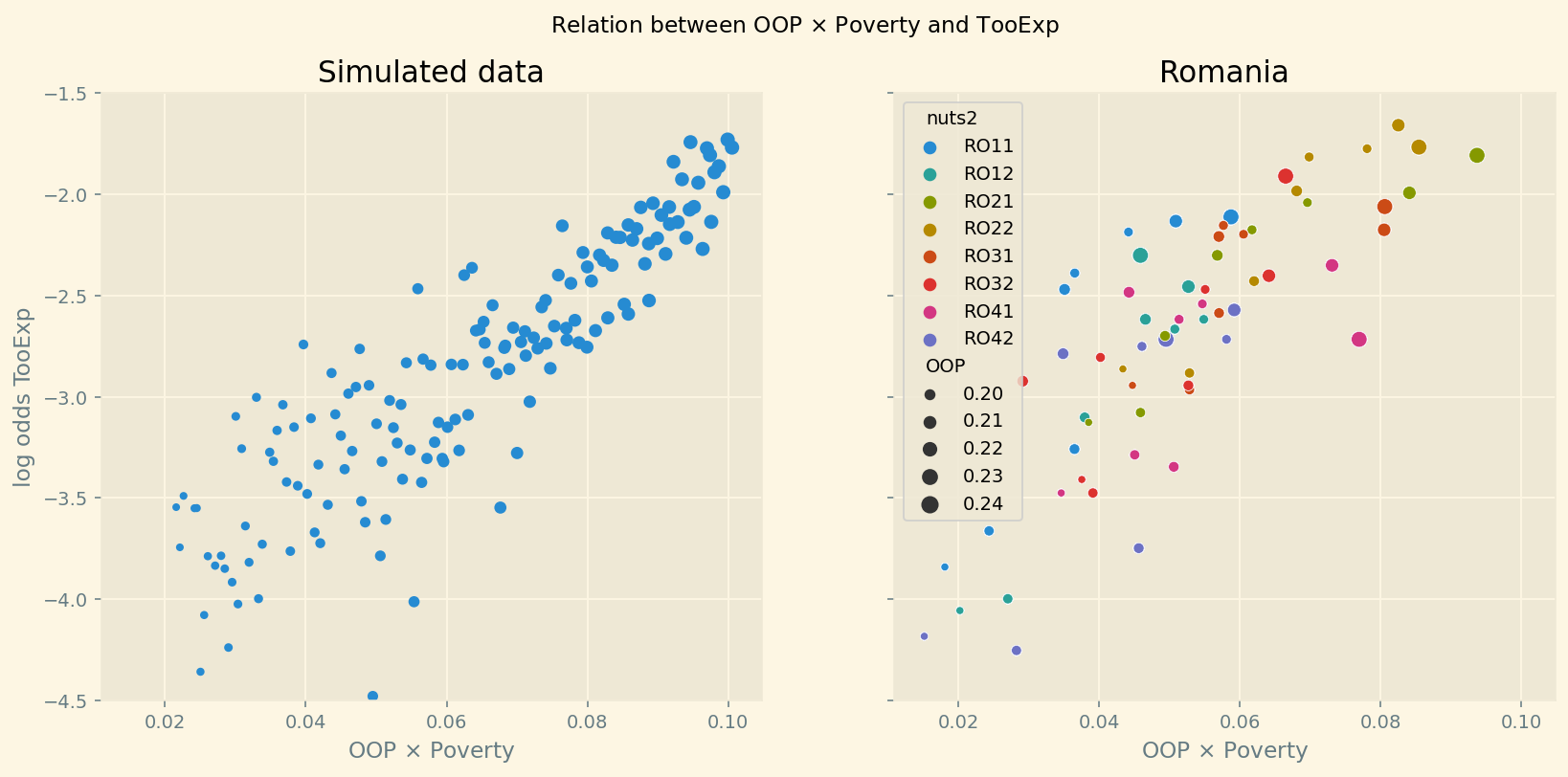

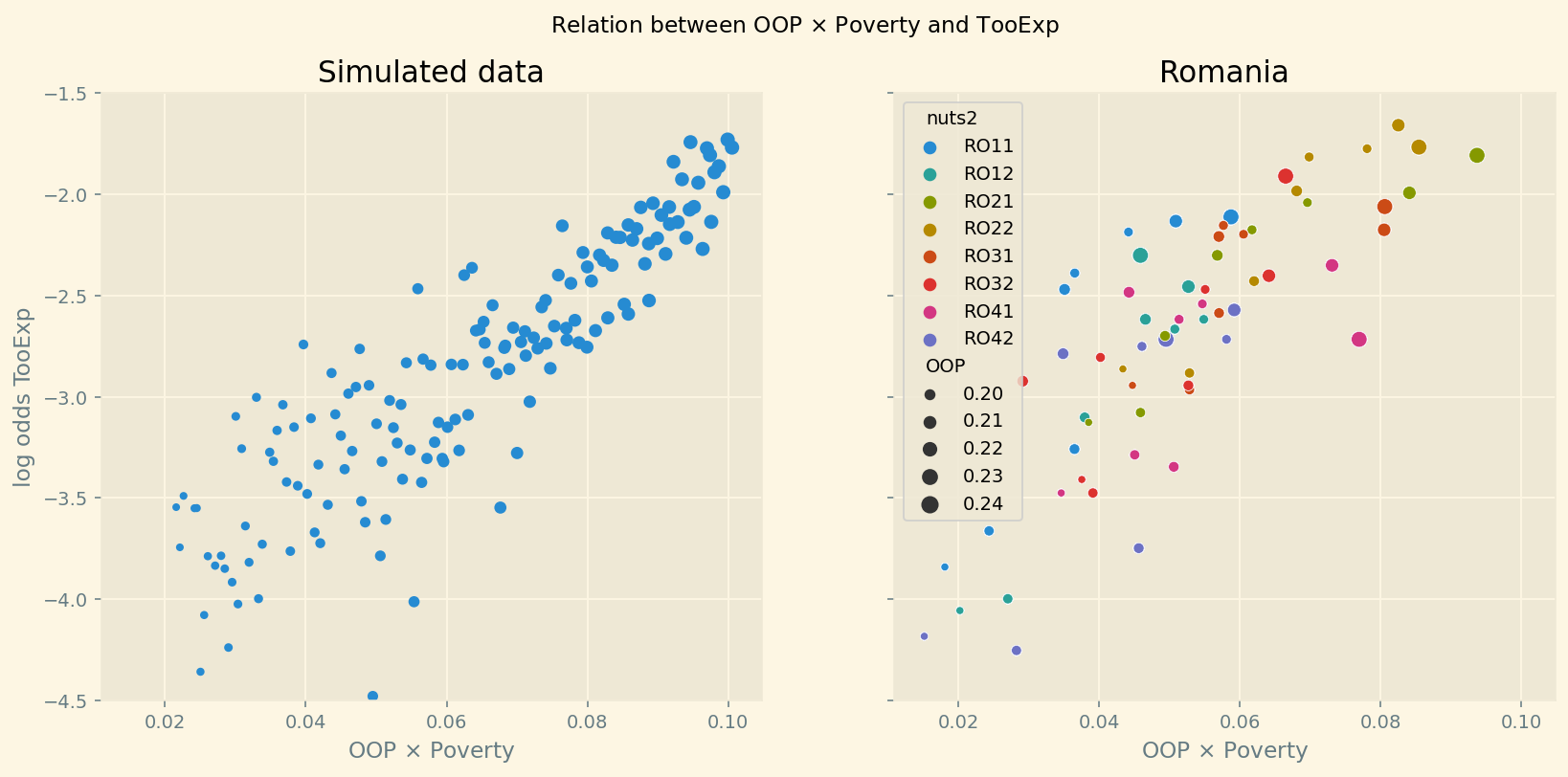

For the second equation in the lemma, we use a linear expansion of TooExp in terms of OOP. The appendix shows how we derive this relation using the policy variables \(\xi\) and \(D\) which affect OOP and TooExp simultaneously. It turns out that there is a direct effect of OOP on TooExp and an interaction effect with the fraction of people below the poverty line in a region. We show that \(b_{oop,c},b_{interaction,c} > 0\): a region that lies in a country with high OOP tends to have high unmet needs (as medical care is expensive) and especially so if the region features a high poverty rate. On the other hand, if OOP equals 0 (healthcare is free at point-of-service) one does not expect poverty to affect TooExp (it will still affect health and mortality through lifestyle choices).

The third equation shows how a 500 euro increase in oop affects mortality for an age/gender category in a NUTS 2 region in year \(t\). In our simulations we present this as the increase in mortality per 1000 deaths. The mortality effect of demand-side cost-sharing goes via unmet medical needs. People fall ill and cannot afford the treatments recommended to them by a physician. This reduces their health status and affects the probability of dying.

As shown below, in our data \(TooExp\) is smaller than 0.5 in all regions. Hence, we see that the mortality effect \(dm/m\) increases with the fraction of people that forgo treatment because it is too expensive. This suggests the following non-linearity in the effect of oop: if demand-side cost-sharing is initially low, \(TooExp\) is low and an increase in oop hardly affects mortality, but in countries where oop is already significant and \(TooExp\) is high a further increase in oop has serious mortality consequences. Finally, an increase in oop increases the fraction of people with unmet medical needs because treatment is too expensive and especially so in regions where poverty is high. The policy implications of this are clear: in a country where oop is already high and where poverty is a serious problem, the government should be careful increasing oop further.

Note that the increase in the number of deaths \(dm_{ag2t}/m_{ag2t}\) is independent of age. This is due to our formulation of mortality where we have a baseline mortality depending on age/gender only and a deviation from this baseline based on poverty and unmet medical needs in the region.

2.2. confounding effects

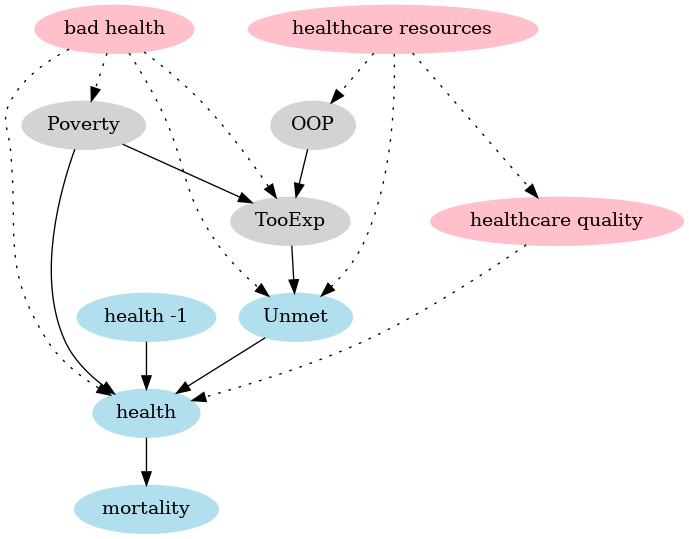

The model sets the stage for the empirical analysis in two ways: (i) it helps us specify functional forms, (ii) it helps us to avoid “causal salad” (McElreath 2020). Because the model is clear on the mechanisms that are covered, we can also identify potential mechanisms that are missing which can confound our estimates. This is illustrated with a Directional Acyclic Graph, DAG (Pearl 2009; Hernán and Robins 2023). The arrow points from the node that has a causal effect to the node that is affected.

Figure 3: DAG of the model (in blue and grey with solid arrows) and confounding effects (in red, dashed arrows).

The grey nodes capture the model equation where poverty and OOP (and their interaction which is not separately depicted in a DAG) affect the fraction of people that forgo treatment because it is too expensive. The blue nodes capture the mortality equation where TooExp affects (because it is part of) people reporting unmet medical needs, poverty affects health and previous period health (health -1) affects today’s health. Finally, health determines mortality.

The DAG clearly shows two causal paths from poverty to mortality. First, poverty is directly associated with lower health, say due to the financial stress involved of living on low income and due to less healthy lifestyle choices, e.g. because fresh fruit and vegetables tend to be expensive. Second, poverty makes it more likely that people forgo valuable treatments leading to unmet medical needs; especially when OOP is high.

Some variables causing differences between regions that are more or less constant over time –think of lifestyle (smoking habits), pollution in the region etc.– are not explicitly mentioned in the DAG but are captured by region fixed effects in the model.

Effects are potentially confounding if they differ between regions, vary over time (not captured by fixed effects) and simultaneously affect both unmet needs and mortality (in the mortality equation) or both TooExp and the interaction OOP \(\times\) poverty (in the TooExp equation). In the robustness analysis, we focus on two plausible mechanisms that can lead to these effects over time: shocks to healthcare resources and to health itself.

First, consider a shock that reduces government resources available to finance healthcare in the region or country. If this increases waiting lists due to reduced healthcare capacity, Unmet is likely to rise. At the same time, this can also reduce the quality of care, say because equipment maintenance is reduced, equipment is replaced less often or due to wage cuts high quality physicians leave and are replaced by lower quality staff. This reduction in care quality can affect health and mortality introducing a different mechanism from the one we focus on; that is, we have a causal path (indicated with dotted lines) via the red node healthcare quality that goes outside the model.

Note that a shock to government resources which raises poverty (due to reduced social assistance) and forces the government to raise out-of-pocket expenditure (say, by raising the deductible) is not a confounding effect. Indeed, the model captures that due to this shock the fraction of people that forgo treatment because it is too expensive goes up. Further, this increase in TooExp raises the fraction of people with unmet medical needs which will affect health and ultimately mortality. Unlike the healthcare quality example above, here all causal paths are within the model (going through gray and blue nodes).

Second, a health shock (causing a higher fraction of people with bad health) can increase both unmet medical needs (as more people fall ill, more people can have unmet needs) and increase mortality. This is partly captured by previous year mortality in the equation for \(m_{ag2t}\) in the lemma but in the current year this effect is depicted by the dotted arrow from (red) bad health to unmet needs and mortality (via health). This (current year) effect goes outside the model and can potentially confound the effect that we find. In the same vein, a health shock can increase the fraction of people that forgo medical treatment because it is too expensive and can raise poverty through reduced productivity. Again this is a potentially confounding effect outside of the model. Although our data do not include the Covid years, we cannot exclude the possibility of other shocks that tend to either reduce health and raise mortality or increase poverty and the fraction of people forgoing treatment because it is too expensive.

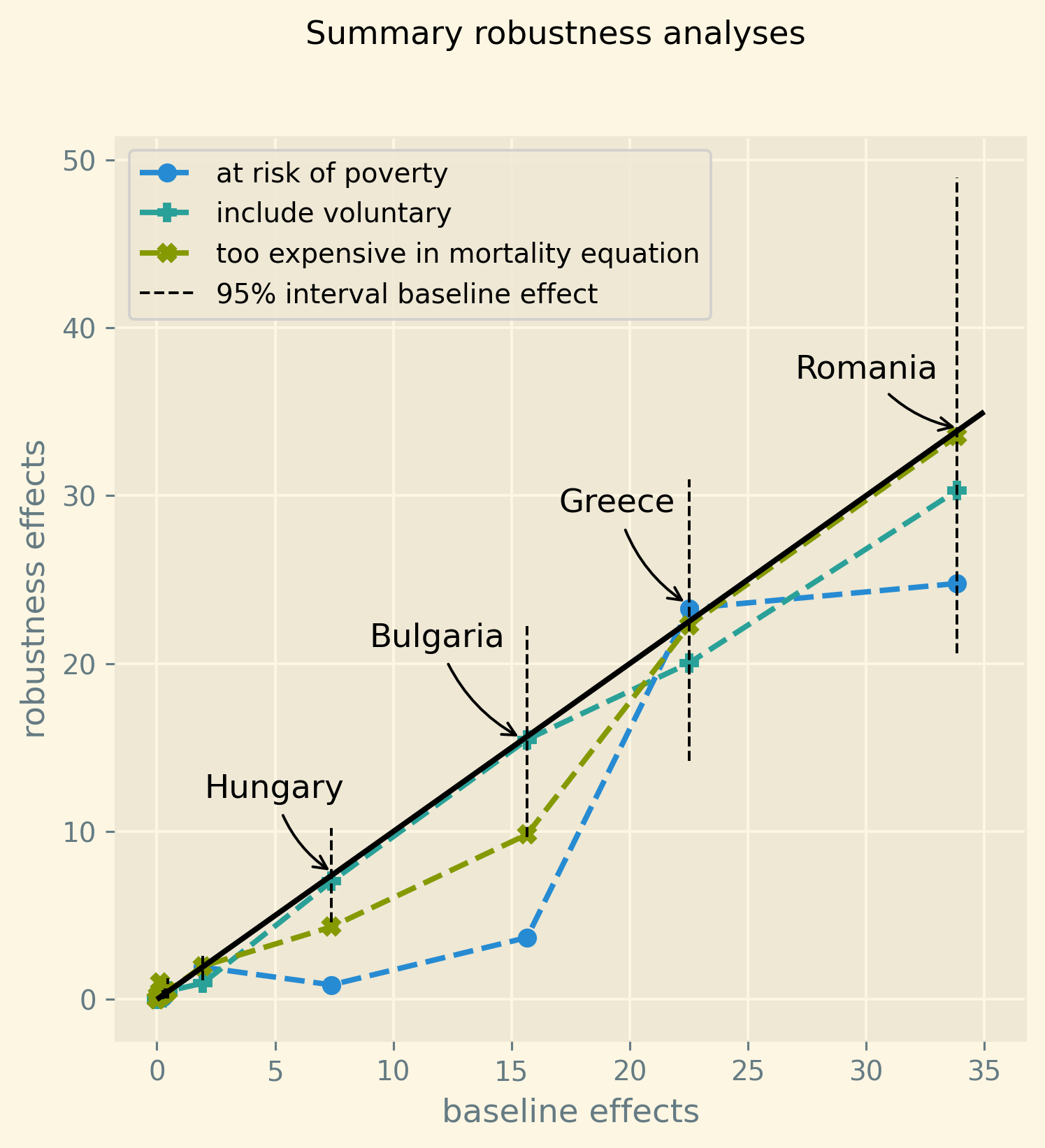

In the robustness section we introduce variables to control for these potentially confounding healthcare quality and health effects. Then we compare our baseline estimate of the OOP effect with the effect that follows from these equations with an extended variable set.

2.3. DAG code

In this section we use the graphviz library to plot the DAG for our model.

g = gr.Digraph() g.attr('node',color='lightgrey', style='filled') g.edge("OOP", "TooExp") g.edge("Poverty", "TooExp") g.attr('node',color='lightblue2', style='filled') g.edge("Unmet", "health") g.edge("health -1", "health") g.edge("health", "mortality") g.edge("TooExp", "Unmet") g.edge("Poverty", "health") g.attr('node',color='pink', style='filled') g.edge("bad health", "TooExp", style="dotted") g.edge("bad health", "Unmet", style="dotted") g.edge("bad health", "health", style="dotted") g.edge("bad health", "Poverty", style="dotted") g.edge("healthcare resources", "Unmet", style="dotted") g.edge("healthcare resources", "OOP", style="dotted") g.edge("healthcare resources", "healthcare quality", style="dotted") g.edge("healthcare quality", "health", style="dotted") g.render("./figures/DAG",format="png")

figures/DAG.png

3. Data

The data that we use is from Eurostat’s regional database and provides for NUTS 2 regions population size and number of deaths per age-gender category. In principle, we have data on 14 countries and 78 regions for the years 2009-2019, ages 35-85 for women and men. The years 2009-2019 were chosen because, at the time of the analysis, data on poverty was available from 2009 onward and data on the number of deaths ran till 2019. Further, we want to exclude the corona years which were exceptional in terms of mortality. We start at age 35 because at ages below 35, mortality is so low that there is hardly a difference between mortality in regions with different poverty levels (see Figure 4 below). For ages above 85 population numbers per region get rather low.

We drop NUTS 2 region-year combinations where for an age-gender category –due to reporting issues or people moving– the number of deaths in a year exceeds the population size at the start of the year. We focus on observations where we have complete records on mortality, the fraction of people indicating they postponed treatment because it was too expensive and oop expenditure.

Table 1 shows the summary statistics for our variables. We briefly discuss the main variables, the appendix provides more detail. We have more than 50k observations.7 The average population size per region-age-gender category is about 7500 and the average number of deaths 100. Median population size per category equals 6500 and median number of deaths 56. In our data, the percentage of people dying in a NUTS 2/year/age/gender category (mortality) equals 2% on average with a maximum of 20% for some region and age combination.

| count | mean | std | min | median | max | |

|---|---|---|---|---|---|---|

| population | 52612 | 7491.3 | 4805.3 | 440 | 6477 | 36117 |

| deaths | 52612 | 103.2 | 126.5 | 0 | 56 | 1033 |

| mortality (%) | 52612 | 2.1 | 2.9 | 0 | 0.8 | 20.7 |

| poverty (%) | 50878 | 16.5 | 6.6 | 2.6 | 15.3 | 36.1 |

| deprivation (%) | 52612 | 11.2 | 12.8 | 0 | 3.4 | 52.3 |

| too exp. (%) | 52612 | 2 | 3.1 | 0 | 0.6 | 16 |

| unmet (%) | 52612 | 5.8 | 4.1 | 0 | 4.8 | 20.9 |

| out-of-pocket (%) | 52612 | 22 | 8.9 | 8.8 | 19.5 | 47.7 |

| voluntary (%) | 52612 | 3.1 | 3.1 | 0.3 | 1.6 | 15.2 |

| expend. per head | 52612 | 3386.6 | 2691.3 | 307.7 | 3559.5 | 8484.9 |

| infant mortality (\textperthousand) | 52612 | 4.3 | 2.3 | 0.8 | 3.6 | 11.6 |

| bad health (%) | 52612 | 12.8 | 12.2 | 0.8 | 8.3 | 78.9 |

We use two measures for poverty; each of these measures comes from the EU statistics on income and living conditions (EU-SILC) survey. The first is “at-risk-of-poverty rate” that we refer to as poverty. This is a relative poverty measure: the share of people with disposable income after social transfers below a threshold based on the national median disposable income. The material deprivation measure (denoted deprivation) refers to the enforced inability to pay unexpected expenses, afford adequate heating of the home, durable goods like a washing machine etc.

In our data, the (unweighted) average (across regions and years) percentage of people at risk of poverty equals 16% with a maximum of 36%. For material deprivation the numbers are 11% and 52%. These measures vary by NUTS 2 region and year but not by age or gender. We use deprivation in our baseline analysis because it captures more closely the idea of postponing treatment due to financial constraints. The poverty variable is used in a robustness check.

Also from the EU-SILC survey, we use the variable capturing unmet medical needs because the forgone treatment was too expensive (too exp). The variable unmet measures percentage of people in need of healthcare that postpone or forgo treatment because it is either too expensive, the hospital is too far away, there is a waiting list for the treatment, the patient hopes that symptoms will disappear without treatment, the patient is afraid of treatment or has no time to visit a physician. As explained in the model above, our analysis uses both too exp and unmet (which includes too exp as reason for unmet medical needs) as variables.

The measure OOP that we use in the baseline model, is based on household oop payments (out-of-pocket). In particular, this measures the percentage of healthcare expenditures paid oop. This varies by country and year. The higher OOP, the less generous the healthcare system is (in terms of higher coinsurance \(\xi\) or deductible \(D\) in the model above). We expect that high OOP is especially problematic in regions with a high percentage of people with low income.

In a robustness analysis we consider the sum of oop and payments to voluntary health insurance (voluntary) as a percentage of health expenditures as our OOP measure. The reason why we also consider voluntary insurance is that basic or mandatory insurance packages can differ between countries. If people are willing to spend money on voluntary insurance, it can be the case that this voluntary insurance covers treatments that people deem to be important. Put differently, a country that finances all expenditure (“free at point of service”) for a very narrow set of treatments would appear generous if we only used oop payments. The narrowness of this insurance would then be signalled by people buying voluntary insurance to cover other treatments.

As can be seen in Table 1, out-of-pocket is the most important component of the two OOP inputs. Percentage of healthcare expenditure paid oop is a multiple of the percentage financed via voluntary insurance (both in terms of the mean and of the minimum, median and maximum reported in the table). Therefore, the baseline model works with oop payments (only).

As shown in Lemma 1, healthcare expenditure per head \(\bar x_{ct}\) (expend per head) affects how OOP influences the fraction of people forgoing treatment because it is too expensive. Expenditure per head is on average 3300 euro for the countries in our data. But the variation is big with a standard deviation of almost 2700 euro.

The last two variables are used in our analysis of confounding effects. Infant mortality is a well known measure of population health and healthcare quality (Health at a Glance 2023: OECD Indicators 2023). In contrast to measures like treatable and preventable mortality, infant mortality is not directly correlated with our mortality measure which considers people above age 35. If there is a negative shock in a year reducing the quality of care, we expect infant mortality to pick this up. It is defined as the number of deaths of infants (younger than one year of age at death) per 1000 live births in a given year.

Finally, bad health gives the percentage of people who answer bad or very bad when asked about their health status in the EU-SILC survey. Around 12% report self-perceived bad or very bad health and this ranges from less than 1% in some regions to almost 80% in others. This variable is used to control for health shocks over time as potential confounding effects. If healthcare quality deteriorates one would also expect more people indicating lower health status.

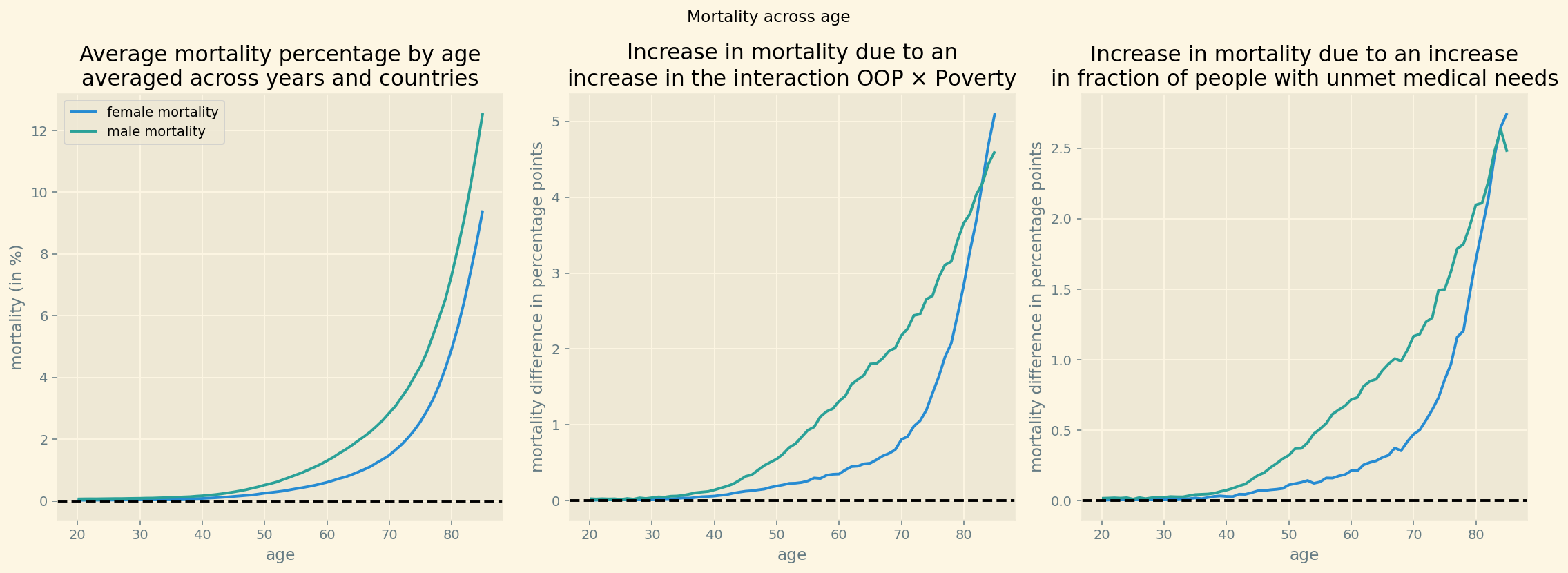

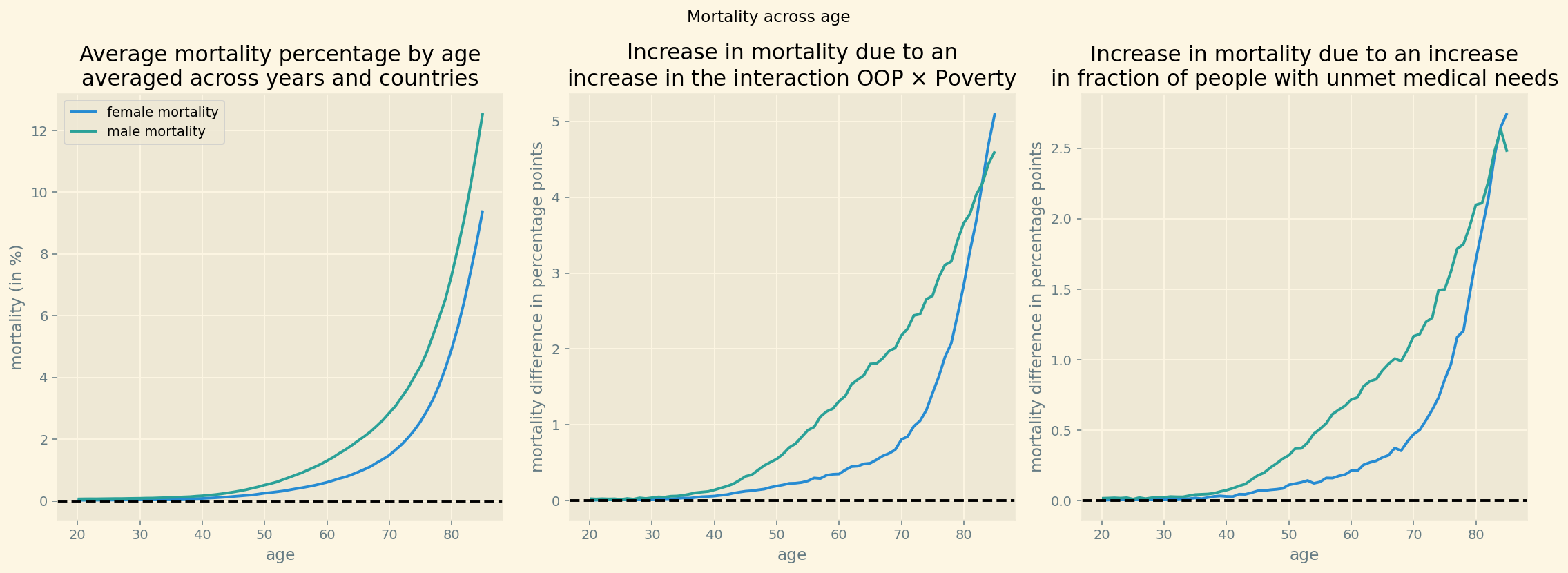

Figure 4 (left panel) shows average mortality as a function of age for women and men. This is the pattern that one would expect: clearly increasing with age from age 40 onward and higher for men than for women (as women tend to live longer than men). Figure 4 (middle panel) shows the effect we are interested in: mortality is higher in regions where the interaction OOP \(\times\) Poverty is high than where it is low and this difference increases with age.

Both for women and for men, we plot per age category the difference between average mortality in regions that are at least 0.5 standard deviation above the mean for OOP \(\times\) Poverty and regions that are at least 0.5 standard deviation below the mean. Around age 82, this mortality difference equals approximately 4 percentage points. In the raw data, for 100 (wo)men aged 82, there are 4 additional deaths in regions with high interaction OOP \(\times\) Poverty compared to regions with low interaction. Note that this plot of the raw data does not correct for other factors, like the poverty level itself, and thus over-estimates the effect of OOP \(\times\) Poverty on mortality. The right panel in this figure does a similar exercise with the fraction of people reporting unmet medical needs. Mortality is higher in regions where unmet needs are at least 0.5 standard deviation above the mean compared to regions where it is 0.5 standard deviation below the mean.

The observation from the figure that the difference between the two sets of regions is approximately zero for people below 35, is our motivation to include ages above 35 only in our data. Further, the difference in mortality between the regions increases with the mortality level in the left panel. This is in line with our specification in Lemma 1 where unmet needs has a multiplicative effect on the underlying (biological) mortality rate modeled by \(e^{\beta_{ag}}/(1+e^{\beta_{ag}})\).

Figure 4: Mortality and difference in mortality between regions with high and low interaction OOP \(\times\) Poverty and high and low unmet medical needs.

3.1. data code

The following python code generates Table 1 with summary statistics. The noweb notation <<code-preamble>> runs the code from Listing 2 into the python cell without using copy/paste.

<<code-preamble>> <<code-data>> headers = ['count','mean','std','min','median','max'] variables = df[['population','deaths','mortality',\ 'poverty',\ 'deprivation',\ 'TOOEXP','unmet',\ 'HF3_PC_CHE','HF2_PC_CHE',\ 'health expenditure per capita',\ 'infant mortality', 'bad_self_perceived_health']]\ .describe().T[['count','mean','std','min','50%','max']] variables = variables.round(1) variables['count'] = variables['count'].astype(int) variables.rename({'poverty':'poverty (%)',\ 'mortality':'mortality (%)',\ 'deprivation':\ 'deprivation (%)', 'HF2_PC_CHE':'voluntary (%)',\ 'HF3_PC_CHE':'out-of-pocket (%)',\ 'TOOEXP':'too exp. (%)',\ 'health expenditure per capita':\ 'expend. per head',\ 'unmet':'unmet (%)',\ 'bad_self_perceived_health':'bad health (%)',\ 'infant mortality':'infant mortality (\\textperthousand)'},inplace=True) print(tabulate(variables,headers,tablefmt="orgtbl"))

| count | mean | std | min | median | max | |

|---|---|---|---|---|---|---|

| population | 52612 | 7491.3 | 4805.3 | 440 | 6477 | 36117 |

| deaths | 52612 | 103.2 | 126.5 | 0 | 56 | 1033 |

| mortality (%) | 52612 | 2.1 | 2.9 | 0 | 0.8 | 20.7 |

| poverty (%) | 50878 | 16.5 | 6.6 | 2.6 | 15.3 | 36.1 |

| deprivation (%) | 52612 | 11.2 | 12.8 | 0 | 3.4 | 52.3 |

| too exp. (%) | 52612 | 2 | 3.1 | 0 | 0.6 | 16 |

| unmet (%) | 52612 | 5.8 | 4.1 | 0 | 4.8 | 20.9 |

| out-of-pocket (%) | 52612 | 22 | 8.9 | 8.8 | 19.5 | 47.7 |

| voluntary (%) | 52612 | 3.1 | 3.1 | 0.3 | 1.6 | 15.2 |

| expend. per head | 52612 | 3386.6 | 2691.3 | 307.7 | 3559.5 | 8484.9 |

| infant mortality (\textperthousand) | 52612 | 4.3 | 2.3 | 0.8 | 3.6 | 11.6 |

| bad health (%) | 52612 | 12.8 | 12.2 | 0.8 | 8.3 | 78.9 |

standardizing data

In our Bayesian estimation we work with standardized variables. To use fixed effects, we index variables by country and NUTS 2 indices, gender, year and age. These indices are generated with pandas’ factorize function.

All variables representing percentages are turned into fractions (between 0 and 1) and are not (further) standardized. Population size and number of deaths are not standardized either. Health care expenditure is standardized by dividing by its standard deviation using the function standardize defined below.

country_index, country_list = pd.factorize(df.country,sort=True) country_code_index, country_code_list = \ pd.factorize(df.country_code, sort=True) nuts2_index, nuts2_list = pd.factorize(df.nuts2,sort=True) nuts1_index, nuts1_list = pd.factorize(df.nuts1,sort=True) gender, gender_list =\ np.array(pd.factorize(df.sex,sort=True),dtype=object) year, year_list =\ np.array(pd.factorize(df.year,sort=True),dtype=object) age_index, age_list = \ np.array(pd.factorize(df.age,sort=True),dtype=object) N_countries = len(set(country_index)) N_nuts1 = len(set(nuts1_index)) N_nuts2 = len(set(nuts2_index)) N_age = len(set(age_index)) def standardize(x): return x/x.std()

The following cell standardizes variables as percentage in our data into fractions and transforms lagged mortality into logs, lagged_log_mortality, and fraction too expensive into log-odds, too_exp_lo. As mortality and TooExp can be zero for some observations, we clip these variables from below. The lower bounds imply probabilities of less than 1%. Neither of these variables is close to one in our data; hence the upper-bound is not relevant.

# dependent variable mortality = df.deaths.values population = df.population.values lagged_log_mortality = np.clip(\ (np.log(df['lagged_mortality_s'])),\ np.log(0.0001),np.log(10)) # nuts 2 measures standardized poverty_s = np.ma.masked_array(df['poverty'].values/100.0) deprivation_s = (df['deprivation'].values/100.0) oop_s = (df['HF3_PC_CHE'].values)/100.0 # only oop oop_e = (df['HF3_PC_CHE'].values+df['HF2_PC_CHE'].values)/100.0 # oop extended to include voluntary insurance too_exp = (df['TOOEXP'].values)/100.0 too_exp_lo = np.clip(np.log(too_exp/(1-too_exp)),np.log(0.0001),np.log(10)) unmet = (df['unmet'].values)/100.0 #log odds of TooExp # country measures expenditure_s = standardize(df['health expenditure per capita'].values) std_expenditure = np.std(df['health expenditure per capita']) N = len(mortality) # total sample size N_years = len(year_list)

Check on sample size, number of countries, regions etc.

<<code-preamble>> <<code-data>> <<code-indexing>> <<code-standardizing>> print("total sample size: {}".format(N)) print("number of countries: {}".format(len(country_list))) print("number of NUTS 1 regions: {}".format(len(nuts1_list))) print("number of NUTS 2 regions: {}".format(len(nuts2_list))) print("number of ages: {}".format(len(age_list))) print("number of years: {}".format(len(year_list)))

total sample size: 52612 number of countries: 14 number of NUTS 1 regions: 25 number of NUTS 2 regions: 78 number of ages: 51 number of years: 10

figure with extended age range

Next, we use a groupby to plot average mortality by age and gender. Further, we compare regions where the interaction OOP \(\times\) Poverty is at least 0.5 standard deviation above the mean with regions where it is at least this distance below the mean. For low (young) ages, there is hardly a difference, mortality is close to 0 for both sets of regions. But from age 40 onward, there is a clear difference which increases with age.

We can generate a similar figure with the (standardized) variable unmet: taking the difference in mortality between regions with unmet 0.5 standard deviation above the mean and 0.5 standard deviation below the mean.

<<code-preamble>> first_year = 2009 last_year = 2019 age_min_fig = 20 df_fig = pd.read_csv('./data/data_deaths_by_age_nuts_2.csv') df_fig['poverty'] = df_fig['at risk of poverty'] df_fig['deprivation'] = df_fig['percentage_material_deprivation'] df_fig.dropna(subset=['deaths','population', 'TOOEXP',\ 'HF3_PC_CHE','lagged_mortality'], axis=0, how ='any',inplace=True) df_fig = df_fig[(df_fig.population > df_fig.deaths) & (df_fig.age >= age_min_fig) & \ (df_fig.age <= age_max) & (df_fig.year <= last_year) &\ (df_fig.year >= first_year)] df_fig['mortality'] = df_fig.deaths/df_fig.population*100 df_groupby = df_fig[['country','age','sex','mortality']].\ groupby(['age','sex']).mean().reset_index()

plt.style.use('Solarize_Light2') fig, (ax1, ax2, ax3) = plt.subplots(1, 3,\ sharex=True,dpi=140,figsize=(16,6)) fig.suptitle('Mortality across age') ax1.plot(np.arange(age_min_fig,age_max+1),\ df_groupby[df_groupby.sex=='F'].mortality,\ label='female mortality') ax1.plot(np.arange(age_min_fig,age_max+1),\ df_groupby[df_groupby.sex=='M'].mortality, label='male mortality') ax1.legend() ax1.axhline(0,c='k',linestyle='dashed') ax1.set_xlabel('age') ax1.set_ylabel('mortality (in %)') ax1.set_title(\ 'Average mortality percentage by age\naveraged across years and countries'); oop_poverty = df_fig['HF3_PC_CHE'] * df_fig['deprivation']/(100*100) k = 0.5 mask_high_interaction = (oop_poverty > np.mean(oop_poverty) + k * np.std(oop_poverty)) mask_low_interaction = (oop_poverty < np.mean(oop_poverty) - k * np.std(oop_poverty)) groupby_high = df_fig[mask_high_interaction].\ groupby(['age','sex'])['mortality'].mean().reset_index() groupby_low = df_fig[mask_low_interaction].\ groupby(['age','sex'])['mortality'].mean().reset_index() mortality_difference_0_F = (groupby_high[groupby_high['sex']=='F'].\ mortality-groupby_low[groupby_low['sex']=='F'].mortality) ax2.plot(groupby_high[groupby_high['sex']=='F'].\ age,mortality_difference_0_F,label='female') mortality_difference_0_M = (groupby_high[groupby_high['sex']=='M'].\ mortality-groupby_low[groupby_low['sex']=='M'].mortality) ax2.plot(groupby_high[groupby_high['sex']=='M'].\ age,mortality_difference_0_M,label='male') ax2.axhline(0,c='k',linestyle='dashed') ax2.set_xlabel('age') ax2.set_ylabel(\ 'mortality difference in percentage points') ax2.set_title(\ 'Increase in mortality due to an\nincrease in the interaction OOP $\\times$ Poverty') unmet_fig = (df_fig['UNMET'])/100.0 mask_high_interaction = (unmet_fig > unmet_fig.mean() + k*unmet_fig.std()) mask_low_interaction = (unmet_fig < unmet_fig.mean() - k*unmet_fig.std()) groupby_high = df_fig[mask_high_interaction].\ groupby(['age','sex'])['mortality'].mean().reset_index() groupby_low = df_fig[mask_low_interaction].\ groupby(['age','sex'])['mortality'].mean().reset_index() mortality_difference_0_F = (groupby_high[groupby_high['sex']=='F'].\ mortality-groupby_low[groupby_low['sex']=='F'].mortality) ax3.plot(groupby_high[groupby_high['sex']=='F'].age,\ mortality_difference_0_F,label='female') mortality_difference_0_M = (groupby_high[groupby_high['sex']=='M'].\ mortality-groupby_low[groupby_low['sex']=='M'].mortality) ax3.plot(groupby_high[groupby_high['sex']=='M'].age,\ mortality_difference_0_M,label='male') ax3.axhline(0,c='k',linestyle='dashed') ax3.set_xlabel('age') ax3.set_ylabel(\ 'mortality difference in percentage points') ax3.set_title(\ 'Increase in mortality due to an increase\nin fraction of people with unmet medical needs') fig.tight_layout();

4. Estimation

In this section, we explain how we estimate the mortality and TooExp equations in Lemma 1.

4.1. Empirical model

The first equation estimates a binomial model with population size as the number of draws and deaths as the number of events. We do this for every combination of age, gender, NUTS 2 region and calendar year in our data. The probability of \(k \leq n\) deaths out of a population \(n\) is then given by

\begin{equation} \label{org8406f9f} \binom{n}{k} m^{k}(1-m)^{n-k} \end{equation}where \(m\) denotes mortality: the probability of death. The equation that we estimate for mortality \(m_{ag2t}\) is given in the lemma above. The coefficient we are especially interested in is \(\beta_{unmet}\). This is the coefficient through which an increase in unmet medical needs because of financial problems affects mortality.

Figure 4 illustrates that without the multiplicative specification for \(m_{ag2t}\) in the lemma, the coefficients for \(\beta_{unmet}, \beta_{poverty}\) would have to vary with age. Indeed, for the young mortality is low even in regions with high poverty or high unmet needs. Specifying coefficients that vary with age would considerably increase the number of parameters that we need to estimate.

The second equation captures how an increase in OOP affects the fraction of people in a region that postpone or skip treatment because it is too expensive. This fraction TooExp is based on (EU-SILC) survey data where we do not know the number of people interviewed. Hence, we cannot model this as a binomial distribution. In our estimation we want to ensure that TooExp is between 0 and 1. For this we assume that TooExp in the lemma above has a logit-normal distribution. That is, the log-odds of TooExp is normally distributed.

Details of the estimation can be found in Appendix 11.1 and the python code is in the online appendix.

4.2. Bayesian estimation

We use Markov Chain Monte Carlo (MCMC), in particular the NUTS sampler to explore the posterior distributions of our parameters. For this sampler, we have the guarantee that the whole posterior distribution is captured as long as we have enough samples. Although this is an asymptotic result, we are confident that drawing four chains of 2000 samples (1000 samples of which are used for tuning) is enough to cover the posterior distribution. In the appendix we discuss a number of checks on this convergence.

It is not straightforward to put priors on the coefficients of the two equations in Lemma 1. To illustrate, how strong is the reaction of mortality to a \(0.1\) increase in the fraction of people reporting unmet medical needs? We are not aware of previous studies looking into this and have no a priori information on the strength of this effect. We use three principles when setting priors. First, we use regularizing priors (“seat belt priors”): priors close to zero with small standard deviations. Hence, a coefficient differs from zero only if there is clear evidence for this in the data. This reduces the risk of over-fitting. Second, we use a hierarchical model to determine the parameters of the prior distributions. Finally, if the theory suggests a parameter is positive, the prior distribution reflects this (e.g. using a half-normal instead of a normal distribution). Details on the priors can be found in the appendix.

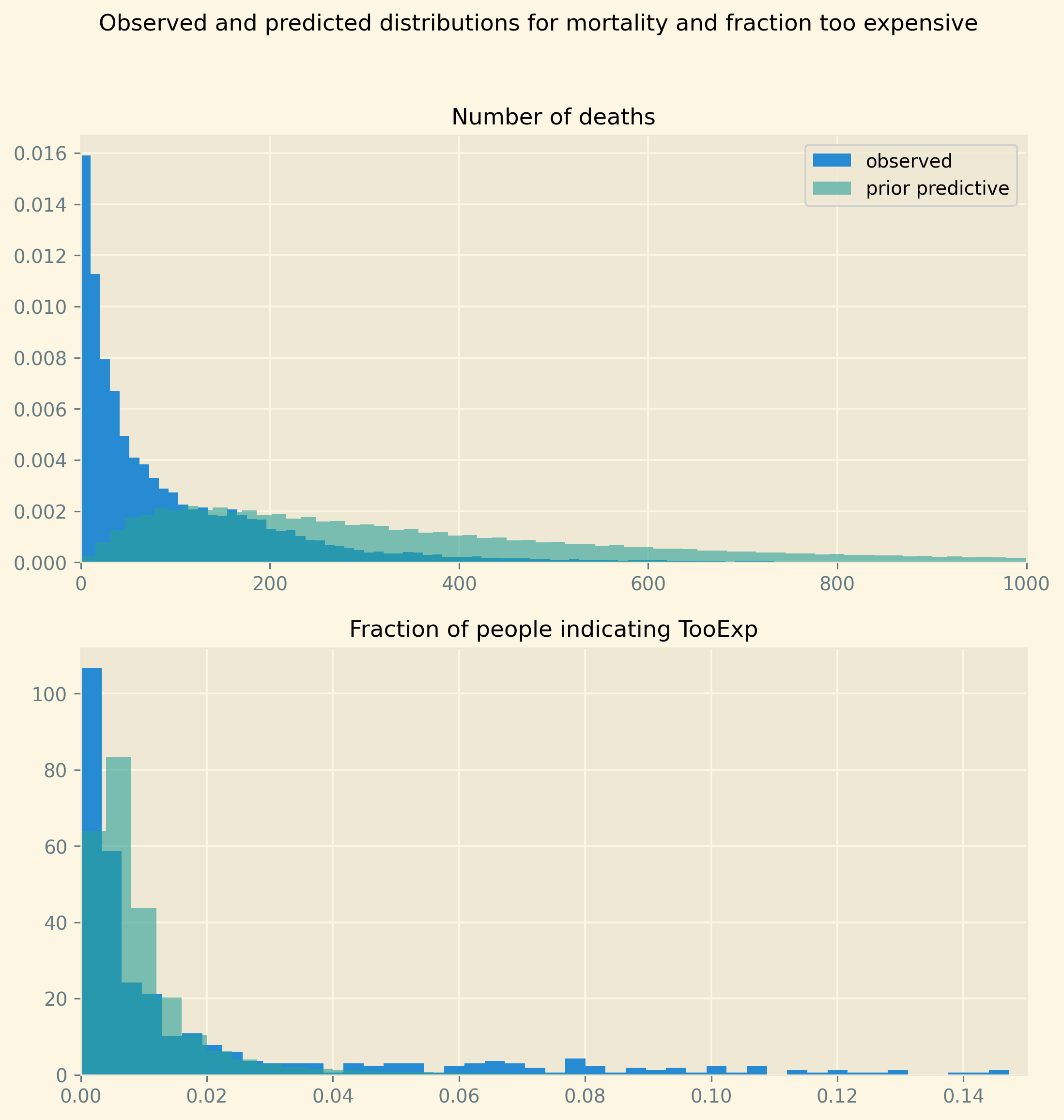

As it is hard to judge how sensible a prior for one particular coefficient is, the online appendix to this section shows the prior predictive distributions. That is, the predictions for mortality and TooExp that the model generates without having seen the data. Comparing the prior predictive distributions with the observed distributions, we show that our priors do not exclude relevant possible outcomes nor do they put (much) weight on unlikely outcomes (say, mortality close to 0.9).

Finally, as shown in Table 1, we have fewer observations for poverty than for deprivation. But in the robustness analysis where we use the variable poverty we do not want to change the sample. Appendix 11.2 explains how Bayesian estimation deals with missing values without imputation or dropping observations.

4.3. baseline model code

model

The function build_model below specifies our Bayesian model where the function has poverty and oop as arguments. These vary over deprivation in the baseline specification and at-risk-of-poverty in a robustness check; oop in the narrow sense of out-of-pocket payments (baseline) and the sum of oop payments and money spent on voluntary insurance for a robustness check.

We use named code blocks in the definition of build_model such that we can use the same code blocks in other models below without having to copy/paste code.

Setting priors for the fixed effects mu_2_too, mu_t_too, mu_2_m, beta_age is not so sensitive in the sense that we are not particularly interested in the values of these effects. They need to be broad enough to cover relevant values. Most fixed effects are centered at 0 with the exception of mu_2_too and beta_age which are centered to roughly capture the average values of TooExp and mortality. To illustrate, the mean of too_exp_lo equals \(-5.17\); hence, we choose mu for the region fixed effects mu_2_too equal to \(-5.0\).

For the coefficients b_oop, b_interaction and beta_lagged_log_mortality, beta_unmet, beta_poverty where we are interested in the size of the effects, choosing a prior is more subtle. As mentioned, we have no clear idea to which extent TooExp or the number of deaths are determined by factors outside of our model (captured by fixed effects) or by the variables that we do model. But we do believe that these effects will be comparable for different variables and across countries (for the TooExp equation).

Hence, we introduce the hyper-parameters sd_prior_b, sd_prior_beta to capture this. These parameters then feed into the priors for the b_ and beta_ parameters.

The beta_ coefficients enter the mortality equation in the multiplier term as \(e^{...}\) which can get big “very fast”. To avoid this term getting too big in sampling the posterior, we introduce an upper-bound on this term. The idea of pt.switch(pt.lt(x, 0.7),pt.exp(x),pt.exp(0.7*(x/0.7)**0.1)) is that when sampling, high draws of x do not cause \(m>1\) which would cause an error. Note that \(e^{0.7}= 2.0\) which is not a factor which we expect: twice as high mortality (for a given age/sex category) in one region compared to another. Hence over the relevant range we expect \(\lambda = e^x\) but when sampling we avoid high values for \(\lambda\) while avoiding a zero derivative which a function like clip() would give us. It turns out that in the posterior of x there are indeed very few values above 0.7 and we work with this term as if it is \(e^x\).

For parameters where the model clearly implies that they are positive, like \(b_{oop},b_{intercation}\), we use a HalfNormal distribution to specify the prior distribution.

Finally, we specify \(m\) as in Lemma 1. Then \(m\) is the probability in our Binomial distribution explaining the number of people that die out of population size population. As explained in the lemma, we start from a baseline age profile \(h = e^{\beta_{ag}}/(1+e^{\beta_{ag}})\) and then multiply this with a factor that varies around 1.

############################ ##code-model-too-expensive## ############################ sd_fixed_effects = 0.3 # hierarchical priors sd_prior_b = pm.HalfNormal('sd_prior_b', sigma = 0.1) σ = pm.HalfNormal('σ', sigma = 1.0) # Too Expensive equation ## NUTS 2 regional fixed effect: mu_2_too = pm.Normal('mu_2_too', mu = -5.0,\ sigma = sd_fixed_effects, dims="nuts2") ## time fixed effect: mu_t_too = pm.Normal('mu_t', mu = 0.0,\ sigma = sd_fixed_effects, dims="year") ## coefficients of the TooExp equation: b_oop = pm.HalfNormal('b_oop', sigma = sd_prior_b,\ dims="country") b_interaction = pm.HalfNormal('b_interaction',\ sigma = sd_prior_b, dims="country") mu_too_exp_lo = pm.Deterministic('mu_too_exp_lo', \ mu_2_too[nuts2_index] + mu_t_too[year] +\ expenditure_s * oop *\ (b_oop[country_index] +\ b_interaction[country_index] * poverty))

########################## ## code-model-mortality ## ########################## sd_fixed_effects = 0.3 # hierarchical priors sd_prior_beta = pm.HalfNormal('sd_prior_beta', sigma = 0.1) # Mortality equation ## age/gender fixed effect: beta_age = pm.Normal('beta_age', mu = -3.0,\ sigma = sd_fixed_effects,\ dims=("age","gender"),\ initval=-3*np.ones((N_age,2))) h = pm.Deterministic('h',pt.sigmoid(\ beta_age[age_index,gender])) ## NUTS 2 fixed effect: mu_2_m = pm.Normal('mu_2_m', mu = 0.0,\ sigma = sd_fixed_effects, dims="nuts2") ## coefficients of the mortality equation: beta_lagged_log_mortality = pm.Normal('beta_lagged_log_mortality',\ mu = 0, sigma = sd_prior_beta) beta_unmet = pm.HalfNormal('beta_unmet', sigma = sd_prior_beta) beta_poverty = pm.HalfNormal('beta_poverty', sigma = sd_prior_beta) mu_x = mu_2_m[nuts2_index] + beta_unmet*unmet +\ beta_poverty*poverty+\ beta_lagged_log_mortality*\ lagged_log_mortality

The following code block defines the function build_model using the code listings above.

######################### ## code-model-baseline ## ######################### <<code-header>> <<code-preamble>> <<code-data>> <<code-indexing>> <<code-standardizing>> lagged_log_mortality = np.asarray(lagged_log_mortality) unmet = np.asarray(unmet) coords = {"country":country_list, "nuts2":nuts2_list,\ "gender":gender_list, "age":age_list,\ "year":year_list} def build_model(poverty,oop): with pm.Model(coords=coords) as baseline_model: <<code-model-too-expensive>> ## equation for the log odds of TooExp Too_exp_lo = pm.Normal('Too_exp_lo', mu = mu_too_exp_lo,\ sigma = σ, observed = too_exp_lo) <<code-model-mortality>> x = pm.Deterministic('x', mu_x) ## combining h and x flat_exp = pt.switch( pt.lt(x, 0.7), # if pt.exp(x), # then pt.exp(0.7*(x/0.7)**0.1) # else ) mortality_function = h*flat_exp ## equation binomial distribution number of deaths: m = pm.Deterministic('m', mortality_function) obs = pm.Binomial("obs", p = m,\ observed=mortality, n = population) return baseline_model

prior predictive

The prior predictive runs the model without fitting it to the data. That is, we just run the prior distributions for the parameters and calculate an outcome for both of our dependent variables. The goal is to check that the samples from the prior predictive are in the same ballpark as our dependent variables. There is no need for the distributions to coincide; the model still needs to be estimated. But we do not want the priors to exclude values that actually appear in the data. Nor do we want the priors to put high probability on outcomes that are unlikely, if not impossible. To illustrate, although Europe has regions where poverty is high and health status low, we do not expect 80% of people to indicate they skipped treatment because it was too expensive.

The figure below shows that prior predictive basically covers the relevant range of values but still has a lot to learn from the data in terms of fitting the distributions for mortality and TooExp resp.

baseline_model = build_model(deprivation_s,oop_s) with baseline_model: baseline_prior_predictive = pm.sample_prior_predictive()

We use pp_ for the prior predictive samples for mortality and the fraction of people indicating they postponed treatment because it was too expensive.

pp_mortality = baseline_prior_predictive.prior_predictive.obs.values.flatten() pp_Too_exp = 1/(1+np.exp(-baseline_prior_predictive.prior_predictive.Too_exp_lo.values.flatten()))

plt.style.use('Solarize_Light2') fig, (ax1,ax2) = plt.subplots(2,1, dpi=280,figsize=(9,9),sharey=False) fig.suptitle('Observed and predicted distributions for mortality and fraction too expensive') ax1.hist(mortality,bins=100,density=True,label='observed') #sns.kdeplot(ax=ax1,data=pp_mortality) ax1.hist(pp_mortality,bins=500,density=True,alpha=0.6,label='prior predictive') ax1.set_xlim(0,1000) ax1.legend() ax1.set_title('Number of deaths', fontsize=12) ax2.hist(1/(1+np.exp(-too_exp_lo)),bins=50,density=True) ax2.hist(pp_Too_exp,bins=250,density=True,alpha=0.6); ax2.set_title('Fraction of people indicating TooExp', fontsize=12) ax2.set_xlim(0,0.15);

fit model and save trace

The following code samples from the posterior and then saves the trace to a file.

with baseline_model: idata_baseline = pm.sample(target_accept=0.85) pm.sample_posterior_predictive(idata_baseline, \ extend_inferencedata=True)

Output of the NUTS sampler: Auto-assigning NUTS sampler... Initializing NUTS using jitter+adapt_diag... Multiprocess sampling (4 chains in 4 jobs) NUTS: [sd_prior_b, σ, mu_2_too, mu_t, b_oop, b_interaction, sd_prior_beta, beta_age, mu_2_m, beta_lagged_log_mortality, beta_unmet, beta_poverty] 100.00% [8000/8000 2:50:11<00:00 Sampling 4 chains, 2 divergences] Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 10213 seconds. There were 2 divergences after tuning. Increase `target_accept` or reparameterize. 100.00% [4000/4000 00:51<00:00]

Out of 8000 samples, we have 2 divergences. This is low enough not to tune the algorithm further.

We save the samples to file:

idata_baseline.to_netcdf("./traces/baseline_model.nc")

./traces/baseline_model.nc

5. Results

In this section we present the results of the estimation of the baseline model. Before presenting the outcome of our estimation, we present two graphical checks of our model.

5.1. model fit

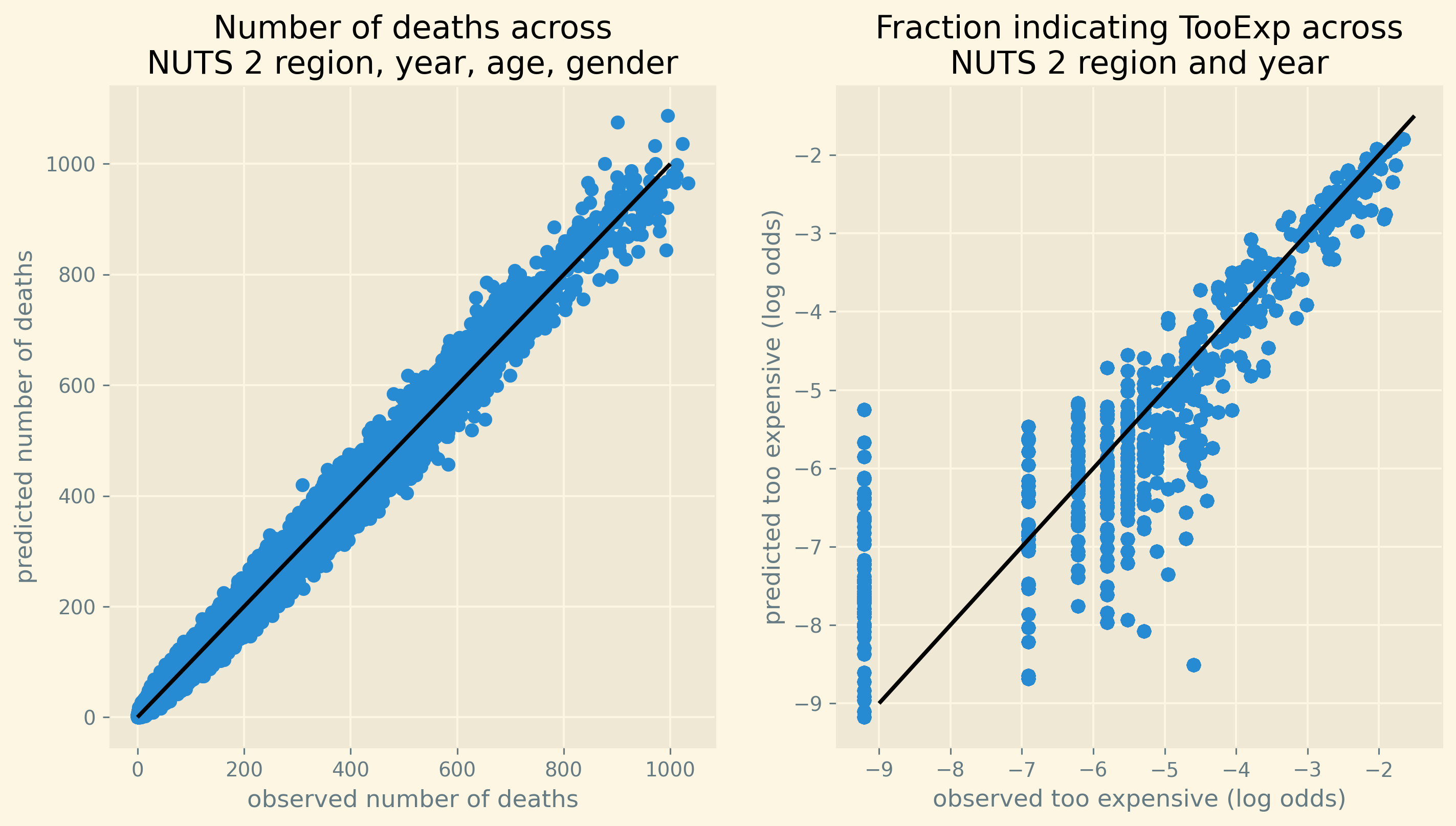

Figure 5 gives an idea of the fit of the model in terms of predicting deaths per age/gender/region/year category and the fraction of people postponing treatment because it is too expensive.

The left panel shows observed number of deaths per category on the horizontal axis and the posterior predictive for this on the vertical axis. For each row in our data, we have observed number of deaths and a distribution of predictions of this number. In the figure, we show the average prediction of deaths across the posterior samples. The predictions are not perfect but do follow the 45-degree line closely.

The right panel shows the (log odds of the) fraction of people per region/year indicating they went without treatment (for a while) because it was too expensive. The difference between this panel compared to the one on the left is that this fraction does not vary by gender and age. Hence, we do not have a prediction for each “row in our data”. The right panel shows the observed and predicted fraction for TooExp per region/year. The dots indicate the average posterior prediction of this log-odds ratio. For small observed values of TooExp (log-odds below \(-5\) in the figure) there is a range of predicted values. Although this range seems wide in log-odds space, both the observed and predicted values are equal to zero. To illustrate, for practical purposes it does not matter if a probability equals 0.0001 (log-odds of \(-9\)) or 0.002 (log-odds of \(-6\)): both values are basically zero. Moreover, given our log-odds specification, the model cannot predict an exact zero probability.

A related observation is that in the data TooExp equals 0 for a number of region/year combinations. To handle this numerically, we use a lower bound for the log-odds. This corresponds to a probability of 0.0001 which is close enough to zero for our purposes. The right panel shows this bunching for a number of observations slightly below \(-9\). The bunching for other values of observed log-odds between \(-5\) and \(-7\) corresponds to regions reporting rounded fractions of \(0.001,0.002\) etc.

Compared to the observed number of deaths, the predictions for TooExp seem less accurate. This is to be expected as there are (a lot) fewer observations for this variable compared to mortality. But all in all the fit does not seem unreasonable as the points cluster around the 45-degree line.

Figure 5: Fit of estimated and observed mortality across all observations and observed and predicted fraction of people indicating TooExp across NUTS 2 regions.

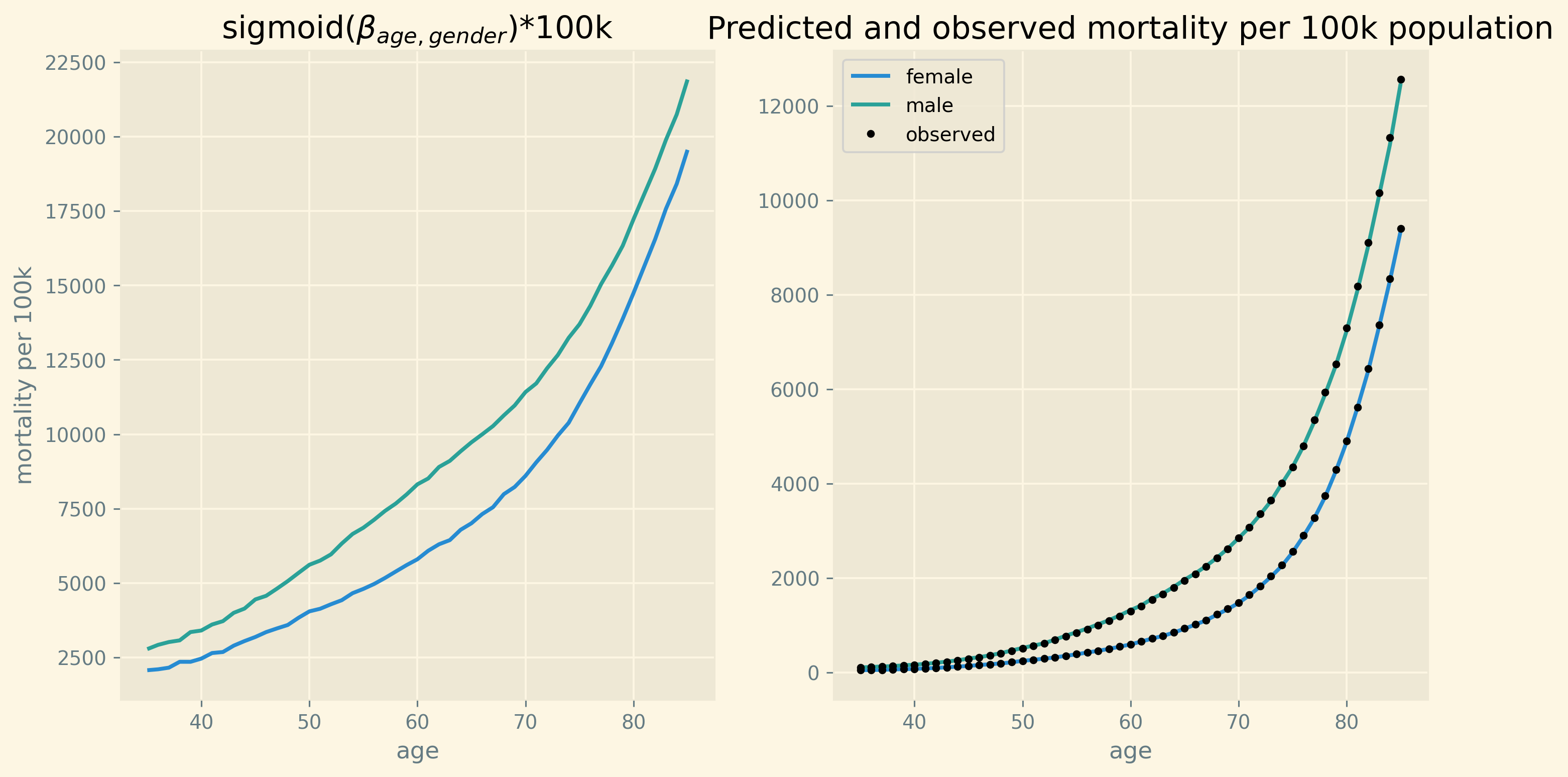

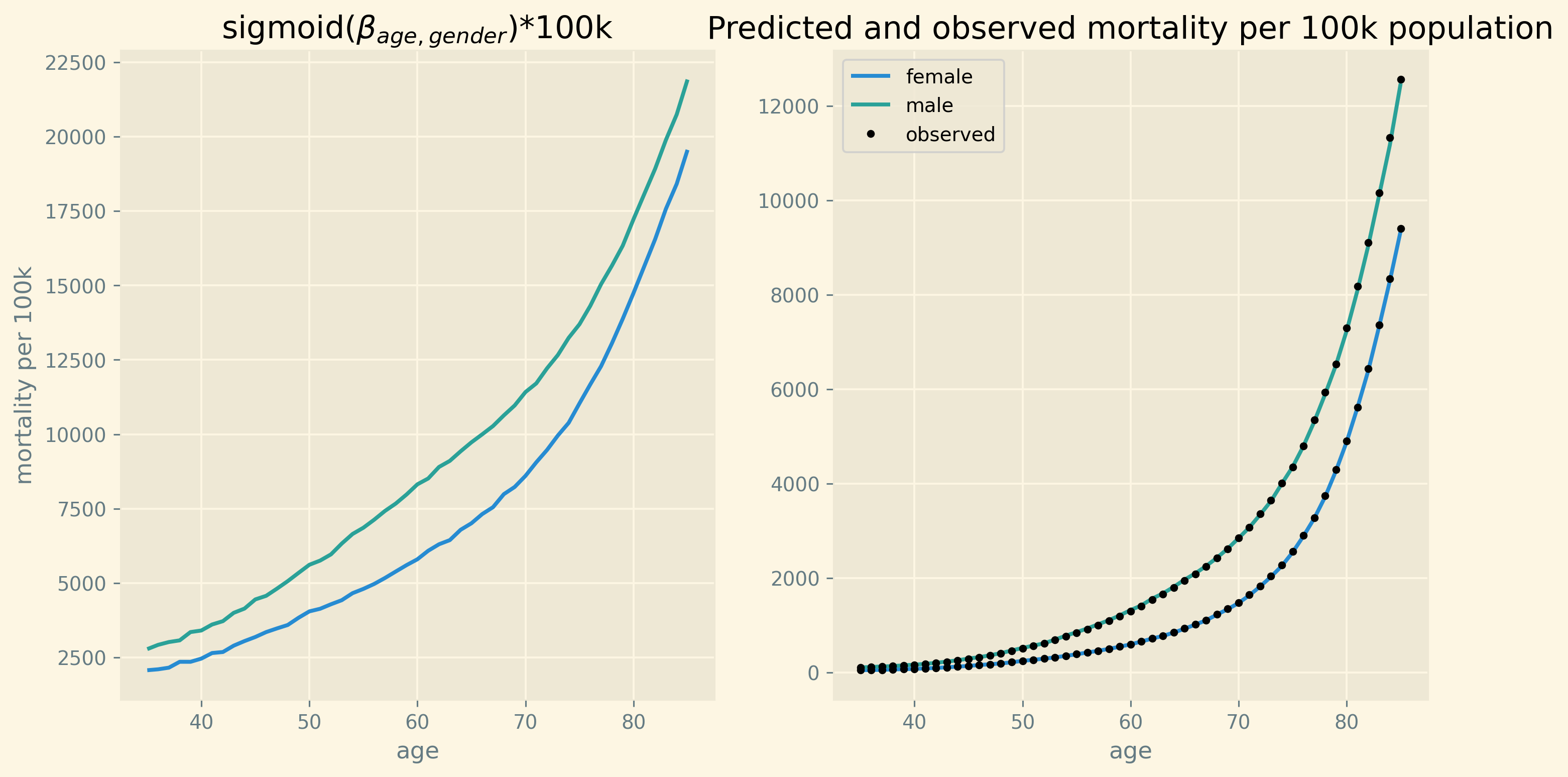

Another way to check how well the model fits, is to see how well it captures the age profile of mortality. This we present in Figure 6. The left panel shows the age profile \(e^{\beta_{ag}}/(1+e^{\beta_{ag}})\). If the other terms in equation \eqref{orgc7f7f41} equal 0, \(e^{\beta_{ag}}/(1+e^{\beta_{ag}})\) gives the probability of death for age/gender category \(ag\). The right panel includes for every region and calendar year the correction on \(e^{\beta_{ag}}/(1+e^{\beta_{ag}})\) to yield mortality for that combination of age/gender/region/year. On average, the model captures the age profile perfectly.

Figure 6: Fit of average mortality by age

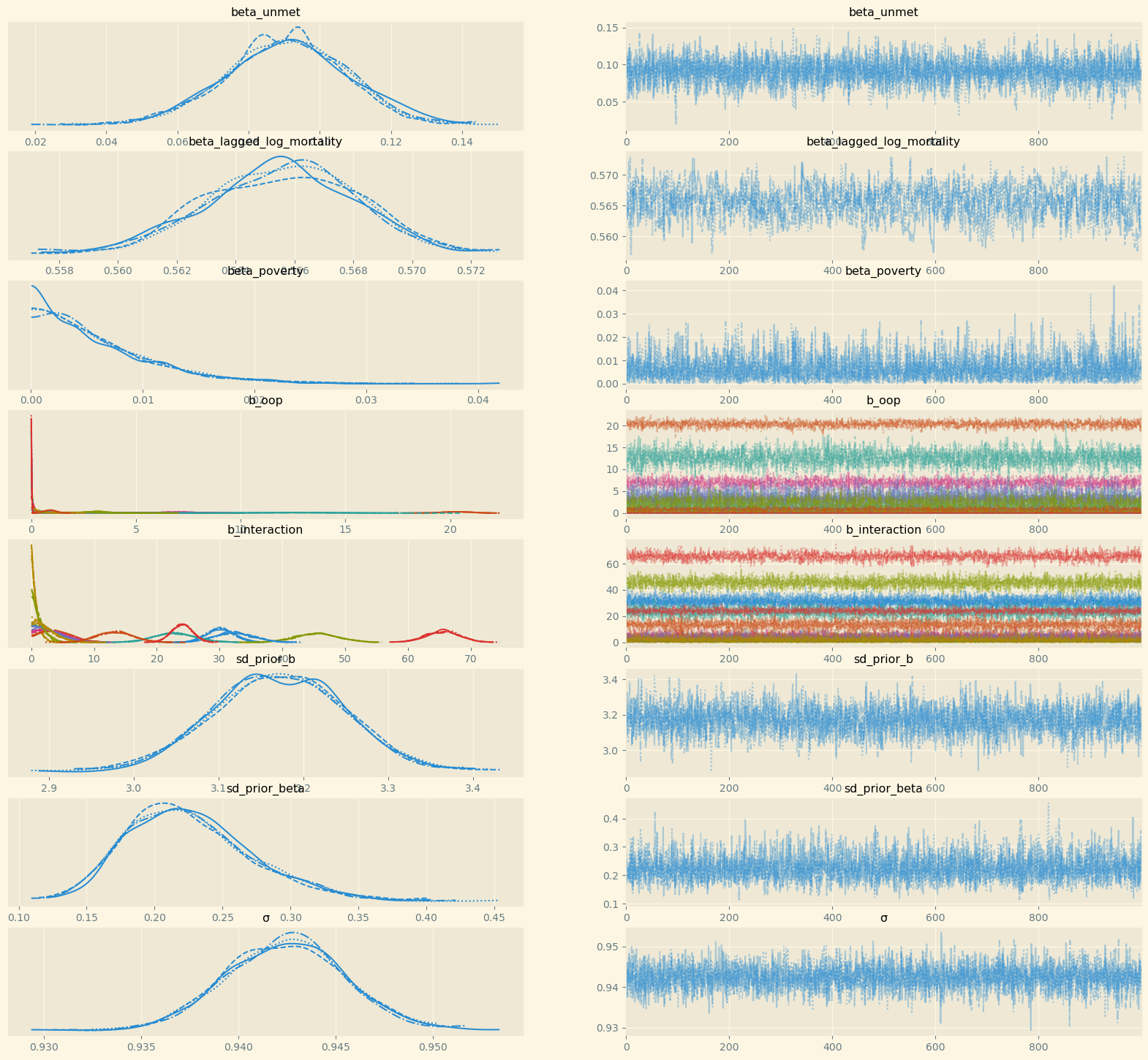

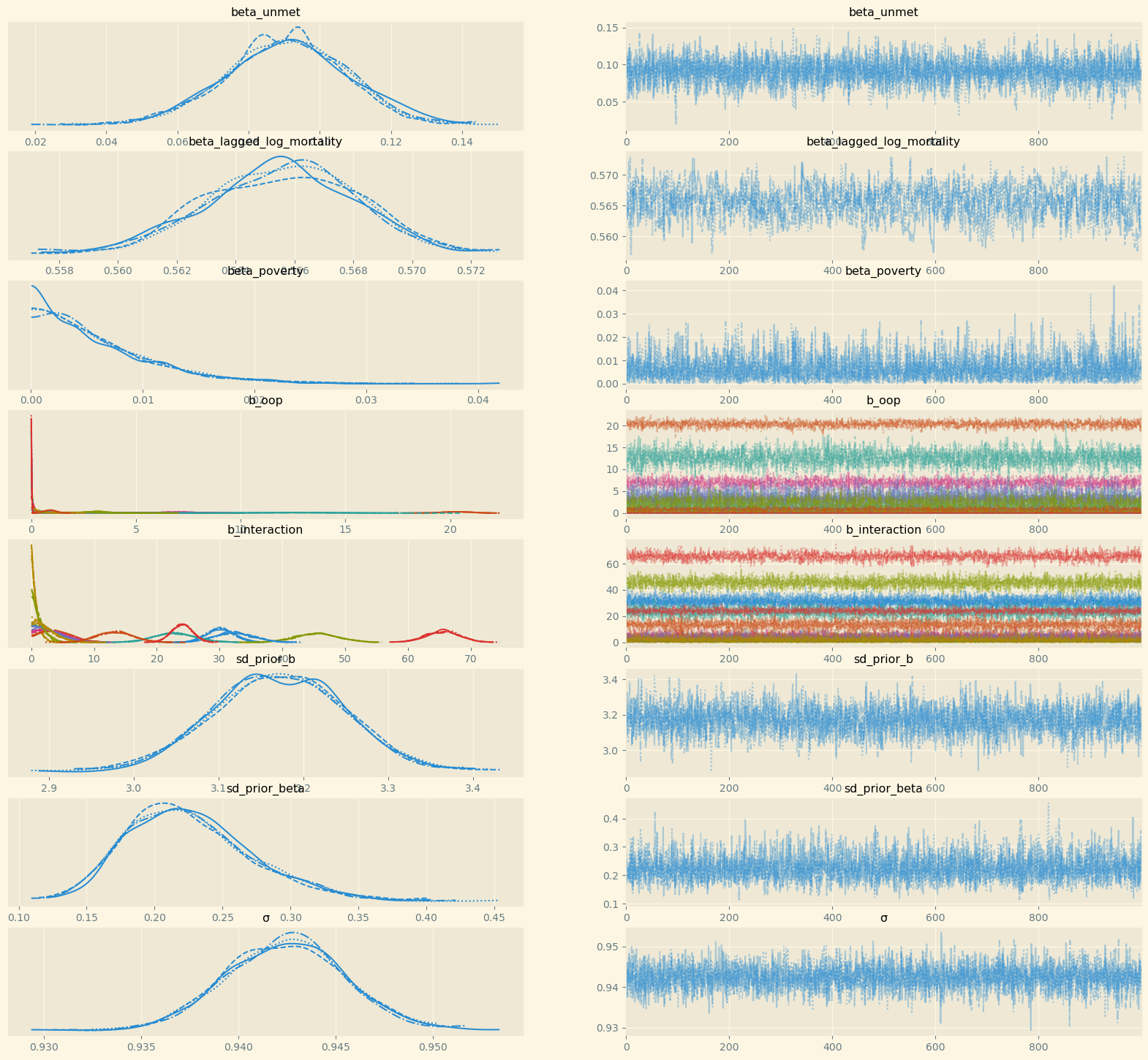

The appendix presents two further checks of the model. Figure 11 shows the trace plots for the parameters of interest. The figures in the left panel show the posterior distribution of the parameters. The coefficients b_oop, b_interaction vary by country and hence we have different colors for the country specific distributions in these graphs. The beta parameters do not vary with country (or another index) and hence there is one color only. In the beta figures it is easy to see that there are four distributions per parameter. These correspond to the four chains that are sampled by the NUTS algorithm.

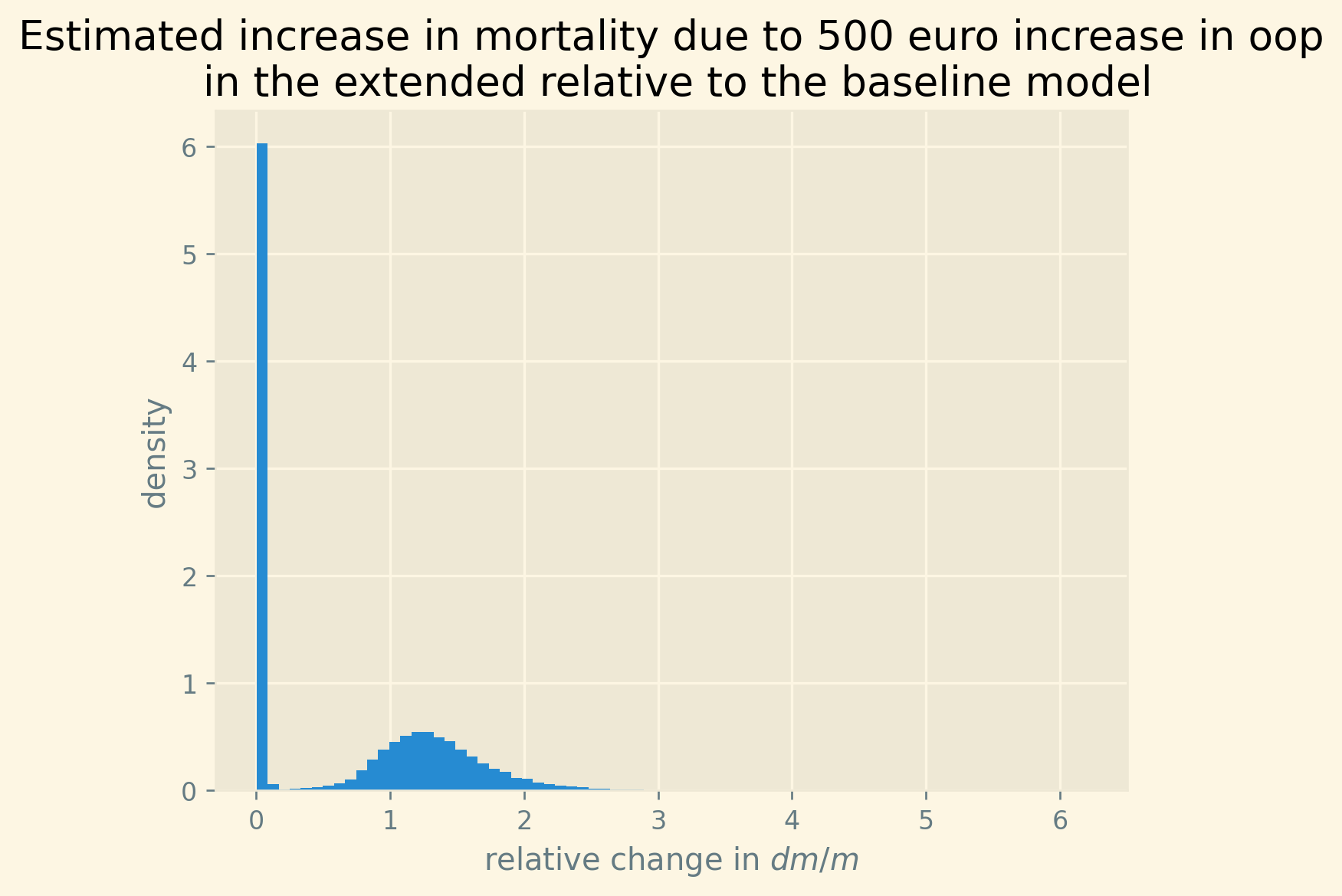

The right panels show the same samples but now ordered across the horizontal axis as they were drawn. We check these plots for the following three features. First, the plot should be stationary; that is, not trending upward or downward. This implies that the posterior mean of the coefficient is (more or less) constant as we sample. Second, there should be good mixing which translates in condensed zig-zagging. In other words, the algorithm manages to draw values across the whole domain of the posterior quickly one after the other. Finally, the four chains cover the same regions. All three features are satisfied for the coefficients in the right panel of the figure.